
Mod Finder Node

Overview

The DaoAI Mod Finder engine uses image pattern features to find the object in 2D space, then uses a 3D space conversion to map the result into 3D space. The result is usually then passed through a point cloud alignment node to improve the accuracy of the 3D position.

The Mod Finder can be configured to find 2D or 3D models.
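As a rough illustration of the 2D-to-3D mapping described above, the sketch below looks up the 3D point stored at a matched pixel. It is not DaoAI's implementation: it assumes an organized point cloud (one 3D point per image pixel, `None` where no depth was measured), and the `pixel_to_3d` helper is a hypothetical name.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]


def pixel_to_3d(
    cloud: List[List[Optional[Point3D]]], x: int, y: int
) -> Optional[Point3D]:
    """Return the 3D point stored at pixel (x, y), or None if it is
    out of bounds or has no depth measurement."""
    if 0 <= y < len(cloud) and 0 <= x < len(cloud[y]):
        return cloud[y][x]
    return None


# Hypothetical 2x2 organized cloud; None marks a pixel without depth.
cloud = [
    [(0.0, 0.0, 500.0), (1.0, 0.0, 501.0)],
    [None, (1.0, 1.0, 502.0)],
]

match_2d = (1, 1)  # pixel position of a 2D match (x, y)
position_3d = pixel_to_3d(cloud, *match_2d)
```

A lookup like this can return no point when the matched pixel has no depth, which is one reason a later alignment step is useful.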

Input and Output

| Input | Type | Description |
|---|---|---|
| Image (2D Mod Finder) | Map<Image> | The source that is used to search for model. |
| or | | |
| DA_Depth_Cloud_result (3D Mod Finder) | Da_Depth_N_Cloud_Conv_Result | |

| Output | Type | Description |
|---|---|---|
| labelledPose2dSequence | Vec<Pose2D> | Vector of pose 2d preserving order from labelled mask sequence. |
| labelledPose3dSequence | Vec<Pose3D> | Vector of pose 3d preserving order from labelled mask sequence. |
| modelMasks | Map<Image> | A map of model masks. |
| modelPoses2D | Map<Vec<Pose2D>> | A map of vector of 2d poses. |
| modelPoses3D | Map<Vec<Pose3D>> | A map of vector of 3d poses. |
| numFound | Int | The total number of occurrences found. |
| result | ModFinderResult | A map, mapping “model_name” to “vector of occurrences of this model”. |
| success | Bool | Boolean value indicating the search is successful. |
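To make the relationship between `result` and `numFound` concrete, here is a minimal sketch that models `result` as a dictionary from model name to a list of occurrences. The `Occurrence` class, its fields, and the model names are hypothetical and only illustrate the shape of the data; they are not the node's actual types.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Occurrence:
    """Hypothetical stand-in for one found occurrence of a model."""
    x: float
    y: float
    angle_deg: float
    score: float


# "model_name" -> "vector of occurrences of this model"
result: Dict[str, List[Occurrence]] = {
    "t_tube": [
        Occurrence(120.0, 80.0, 15.0, 92.5),
        Occurrence(300.0, 210.0, 190.0, 88.0),
    ],
    "bracket": [],  # a model with no occurrences in this image
}

# The total number of occurrences across all models.
num_found = sum(len(occurrences) for occurrences in result.values())
```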

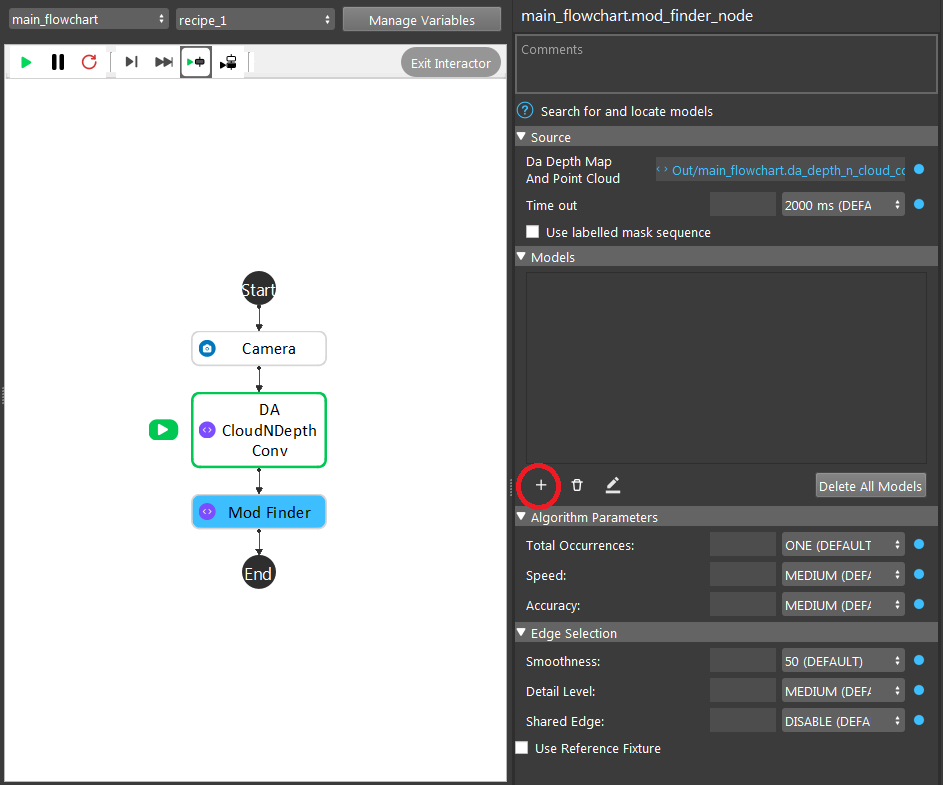

Node Settings

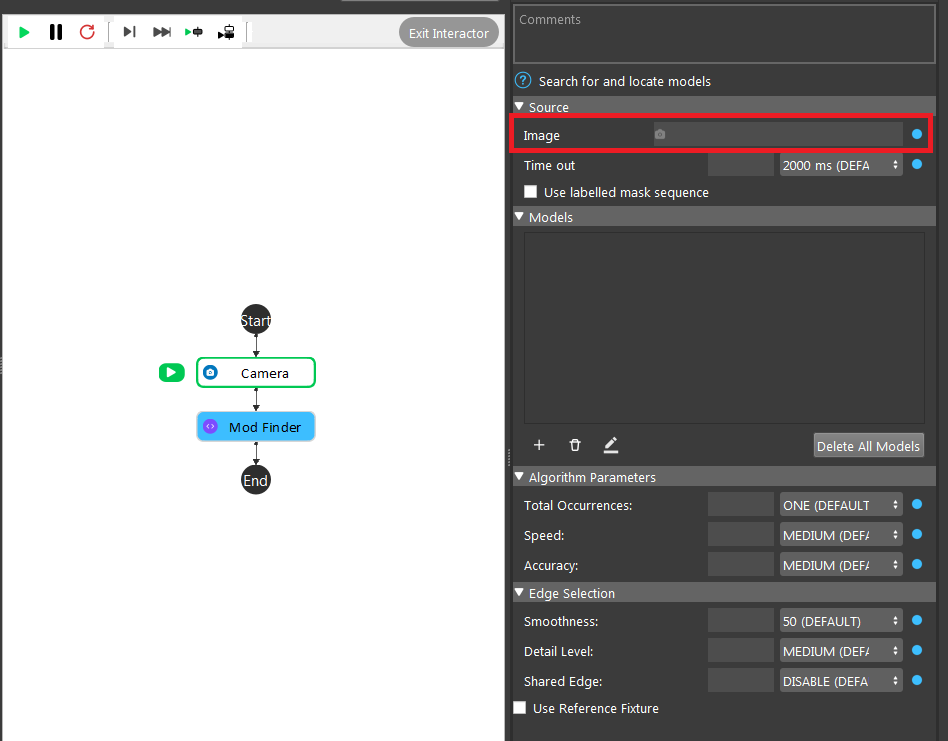

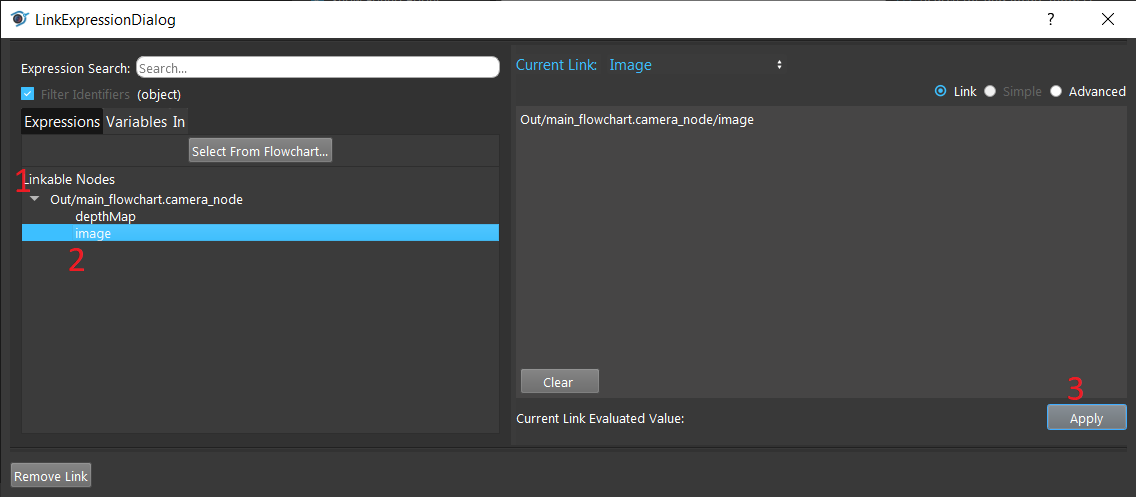

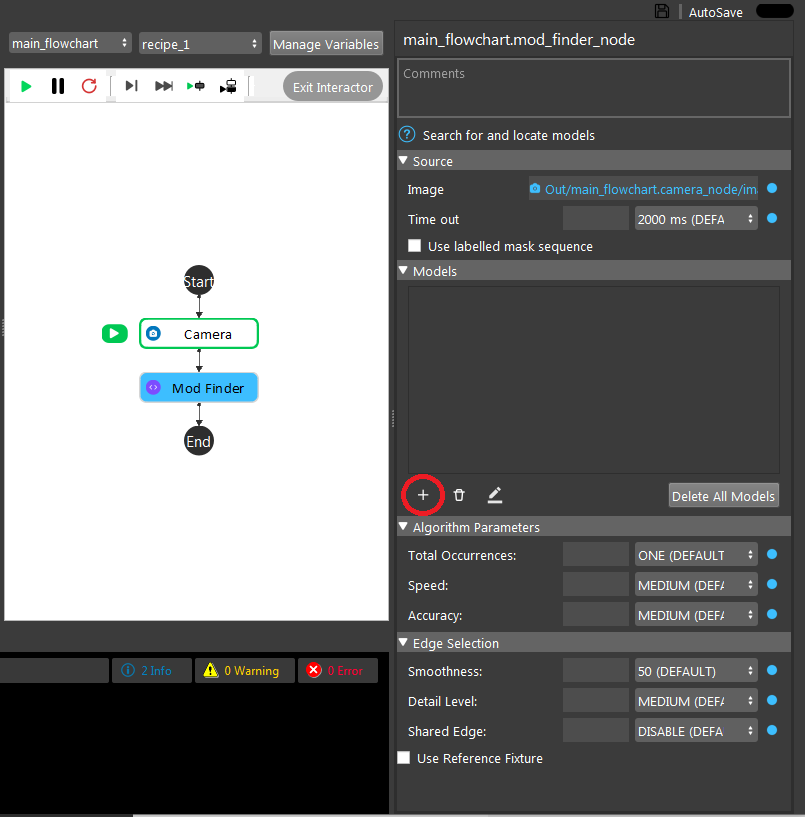

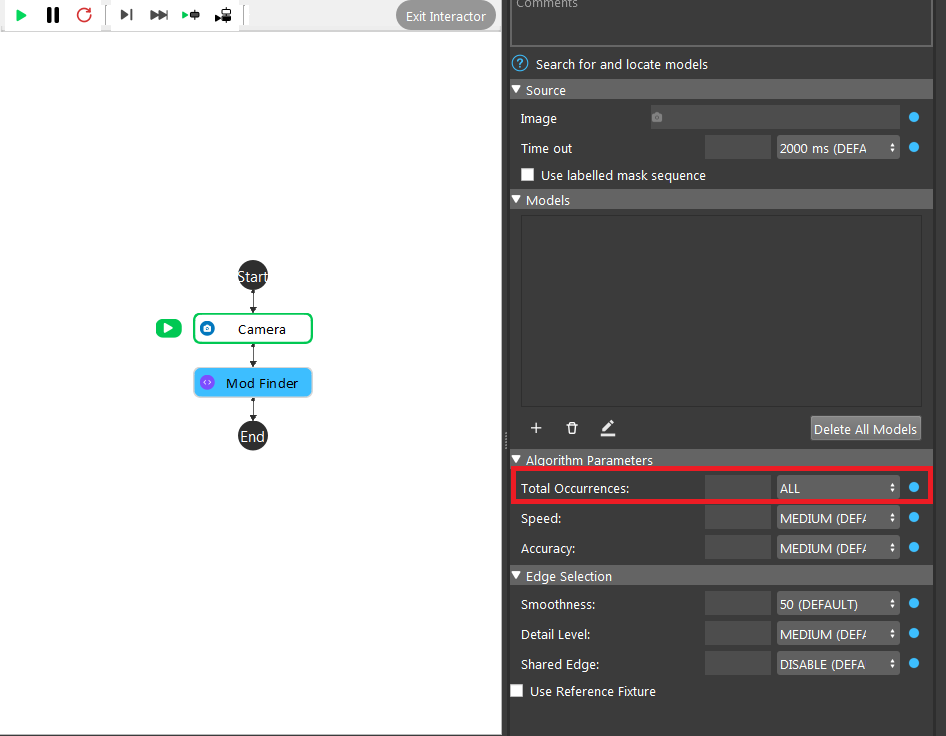

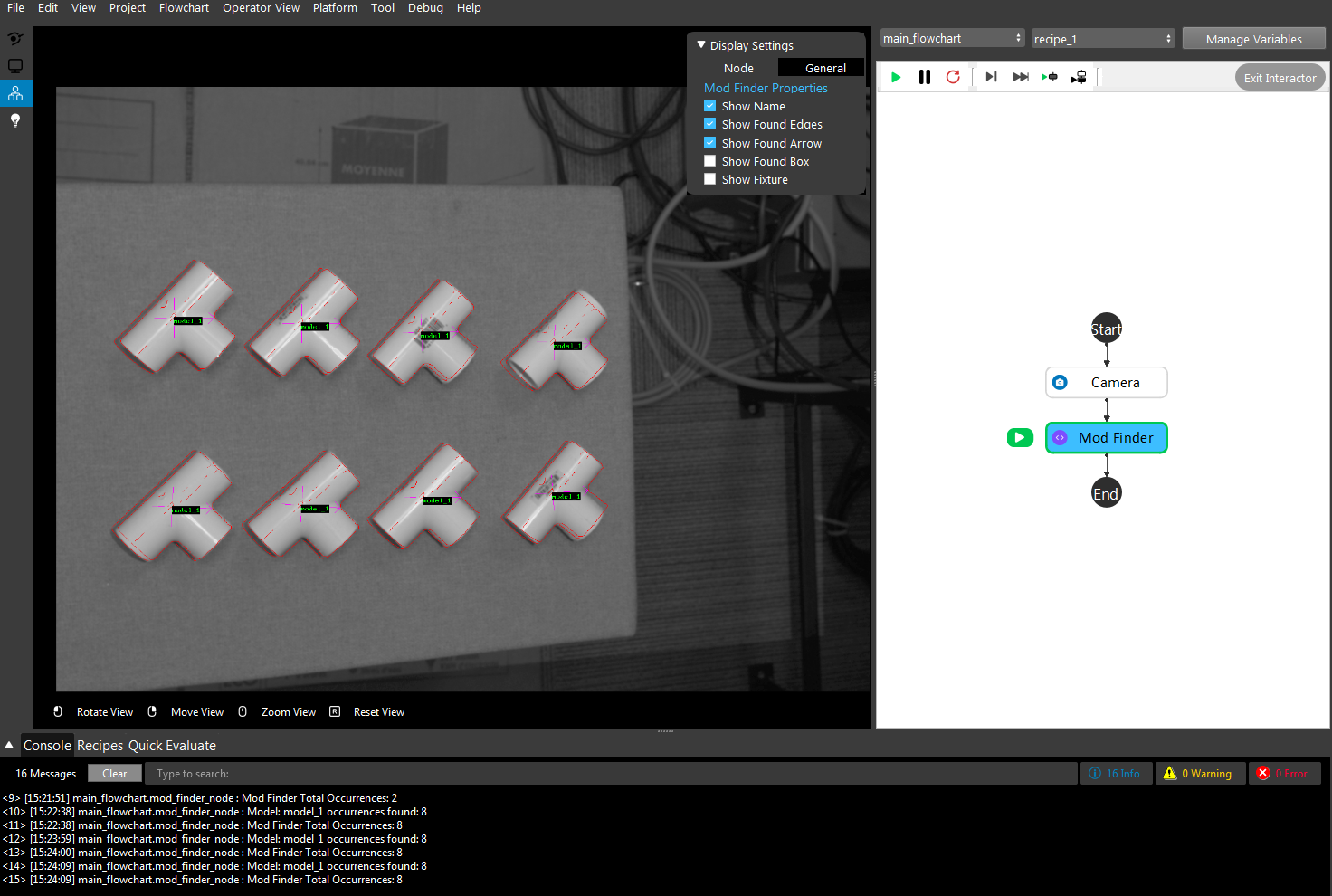

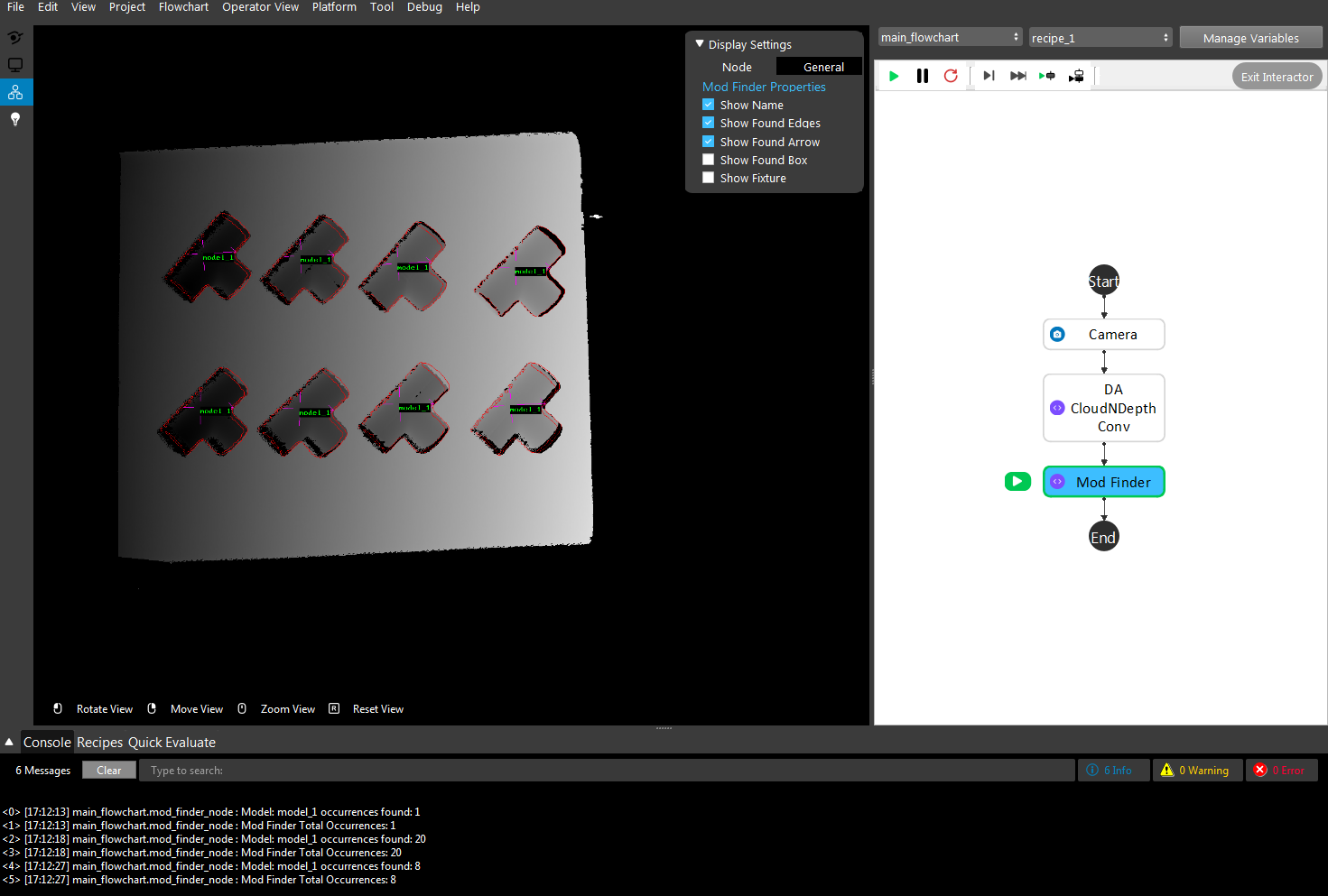

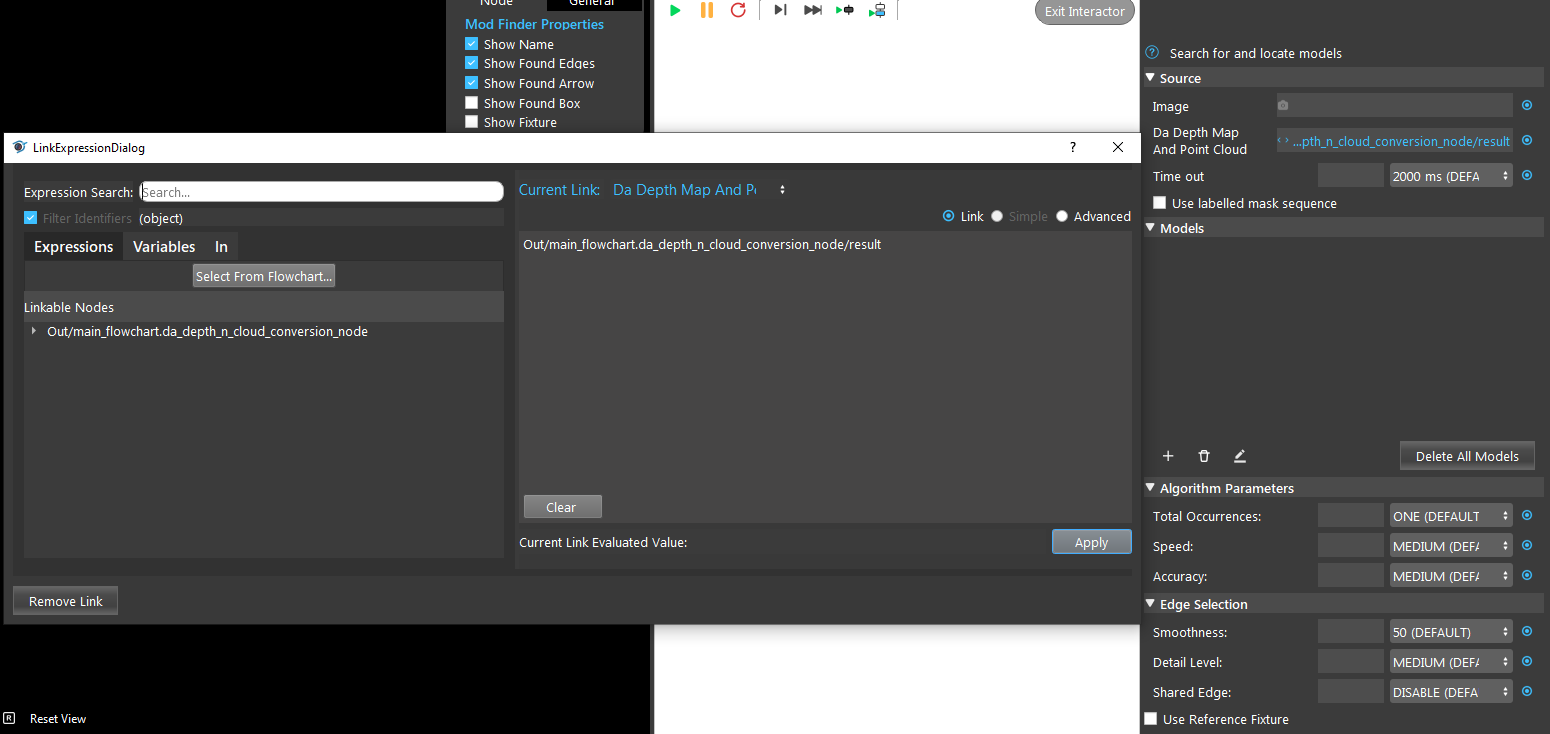

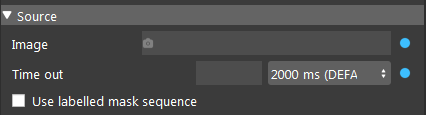

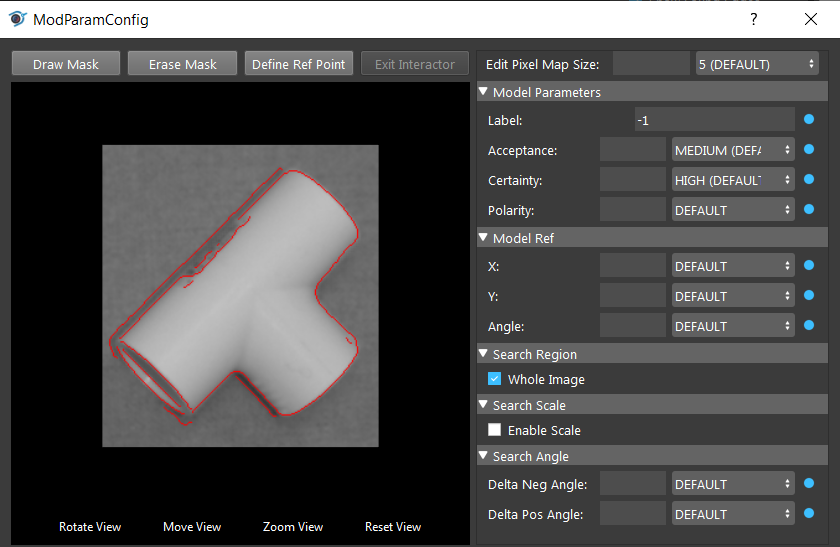

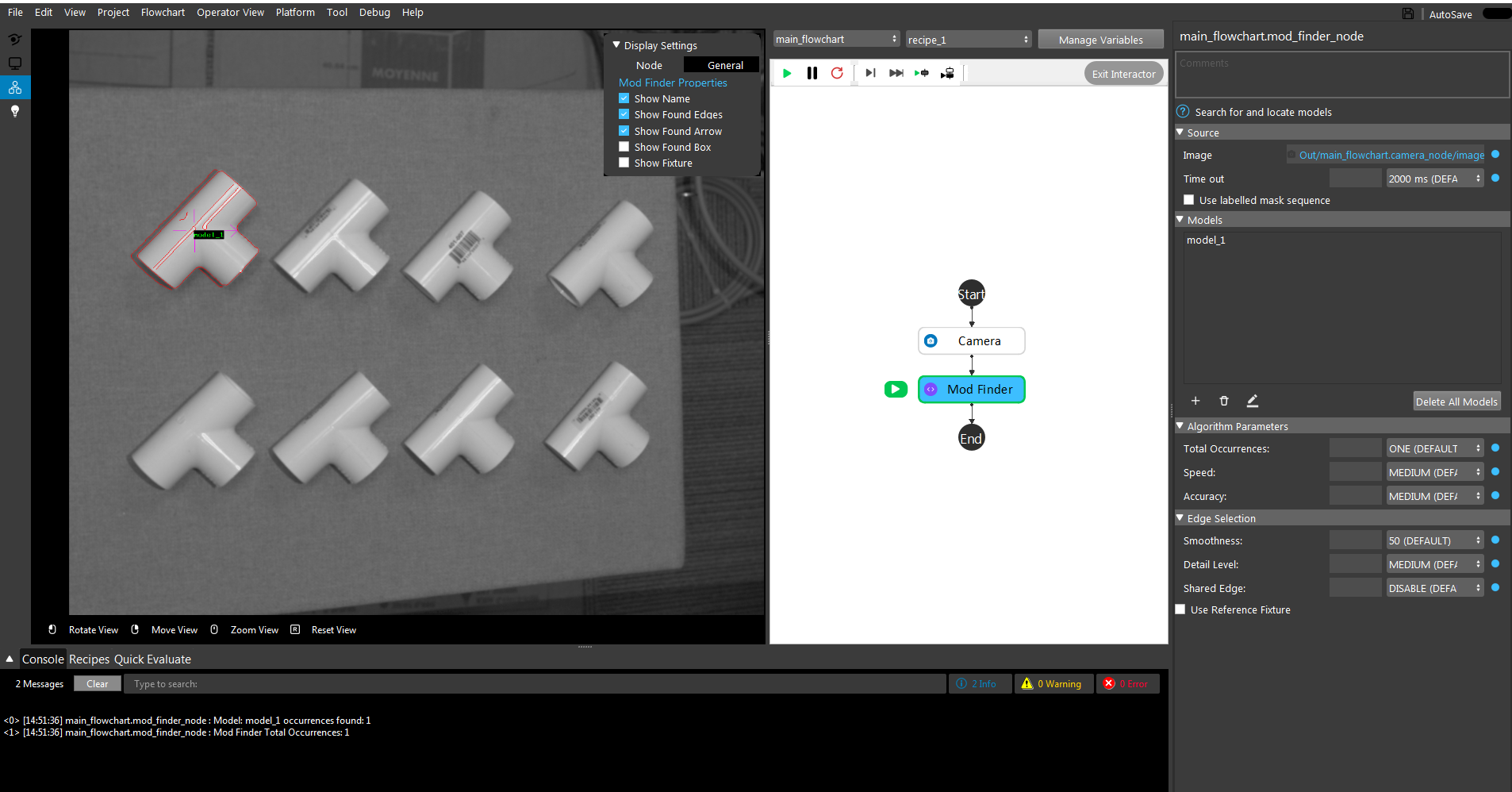

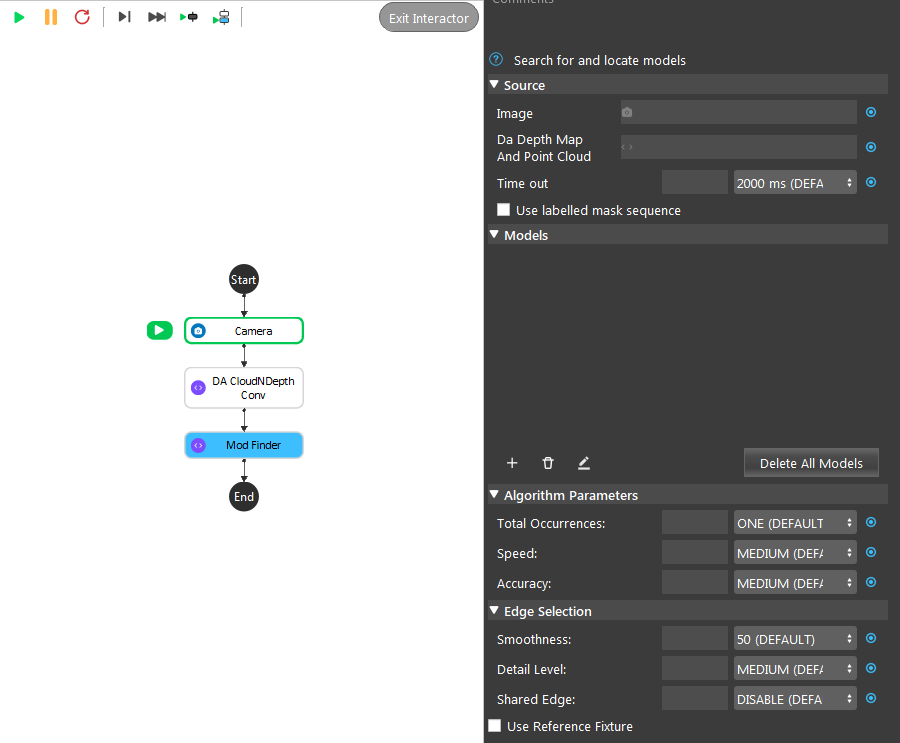

The following screenshots demonstrate the settings of the 2D Mod Finder.

The only difference between the settings of the 2D Mod Finder and the 3D Mod Finder is that the “Image” input in the “Source” section for 2D is replaced by “Da Depth Map And Point Cloud” for 3D.

Source Parameters

Image:

The source image in which to search for the model. Use a link expression to link the image.

Time Out: (Default value: 2000 ms)

The time limit for the node to run. When the running time of the node reaches the time limit, the node terminates and returns its current output.
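The time-out behavior can be pictured as a loop that checks a deadline and returns whatever has been produced so far. This is only a sketch of that idea under assumed names (`run_with_time_limit`, `process`); how the node checks its time limit internally is not documented here.

```python
import time
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_with_time_limit(
    items: Iterable[T], process: Callable[[T], R], timeout_ms: float = 2000.0
) -> List[R]:
    """Process items until done or until the time limit is reached,
    then return the results collected so far."""
    deadline = time.monotonic() + timeout_ms / 1000.0
    results: List[R] = []
    for item in items:
        if time.monotonic() >= deadline:
            break  # time limit reached: stop and keep the partial output
        results.append(process(item))
    return results


all_results = run_with_time_limit([1, 2, 3], lambda v: v * 2, timeout_ms=60000)
no_results = run_with_time_limit([1, 2, 3], lambda v: v * 2, timeout_ms=0)
```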

Use labelled mask sequence:

Refer to the “Search Model In Labelled Mask Sequence” section below.

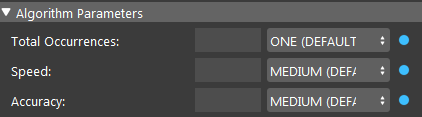

Algorithm Parameters

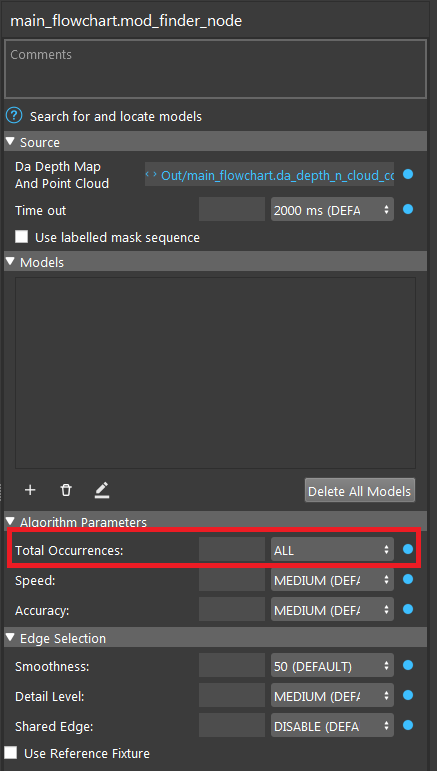

Total Occurrences: Range [0,∞) (Default value: ONE)

The number of occurrences of each model to search for. It can be set to any positive number or to “ALL”. If more objects are detected than the Total Occurrences value, only the objects with the highest match scores are returned. If fewer objects are detected than the Total Occurrences value, all of them are returned.

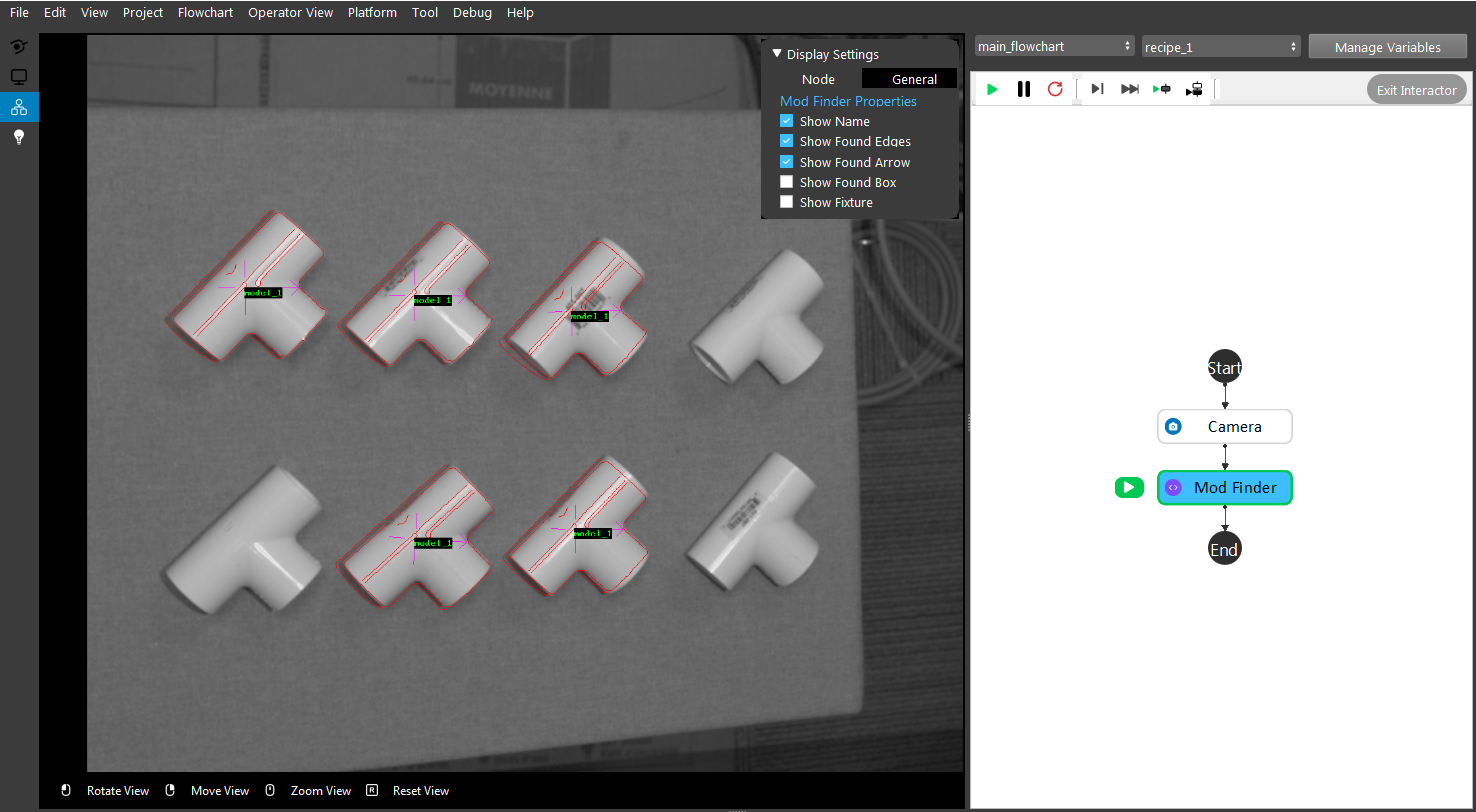

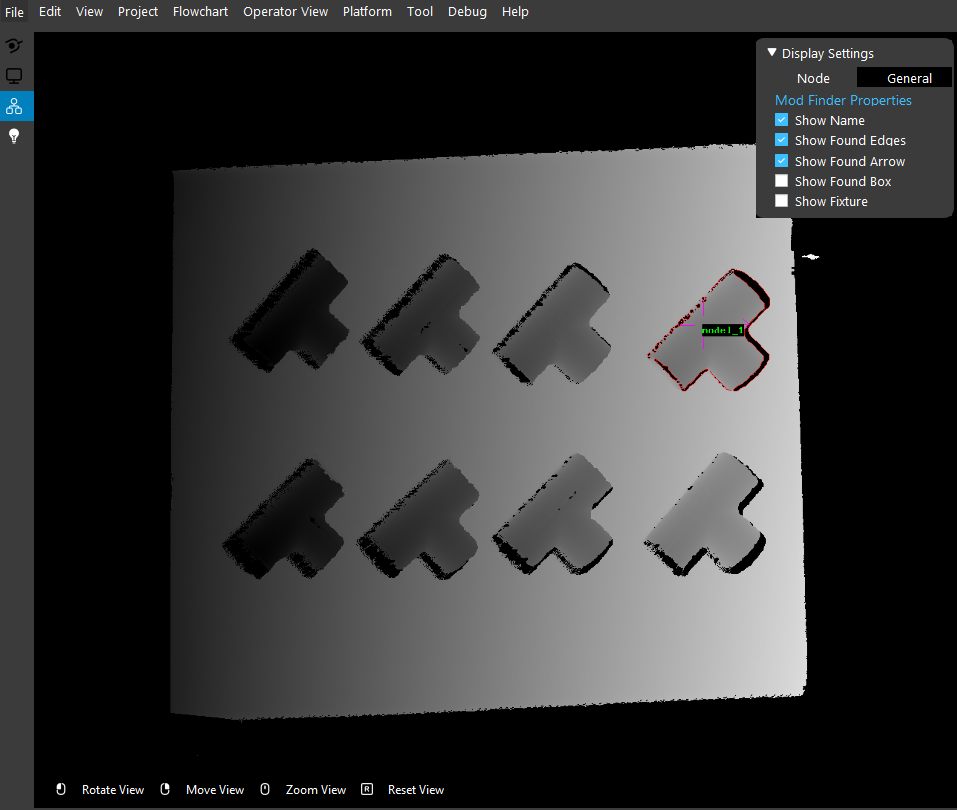

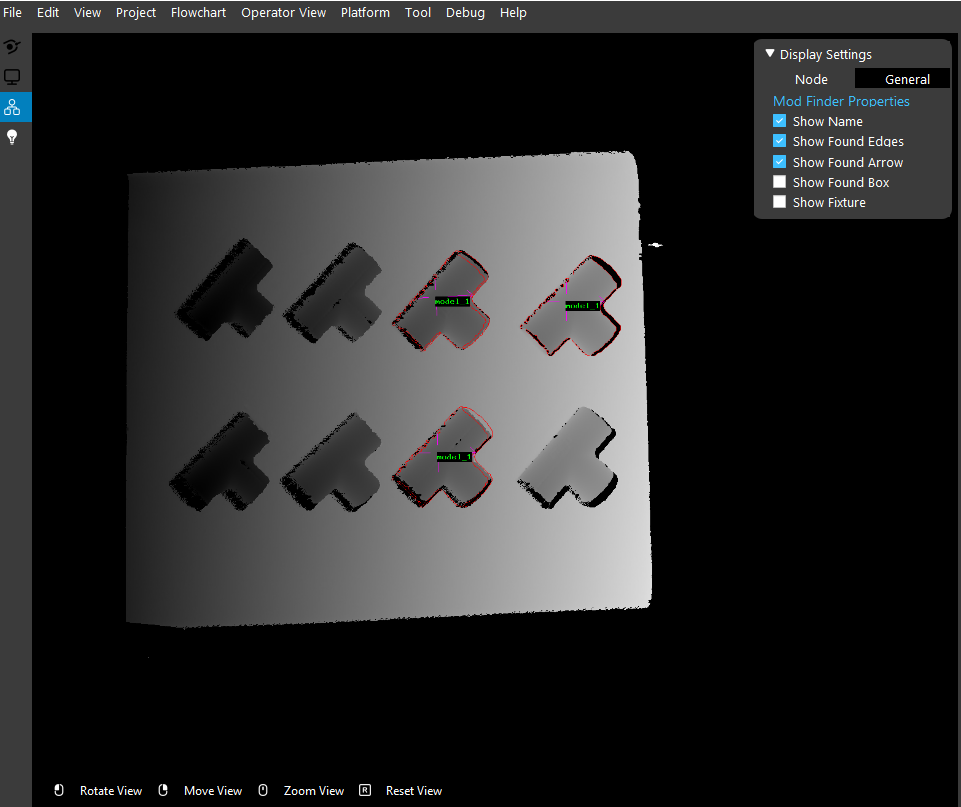

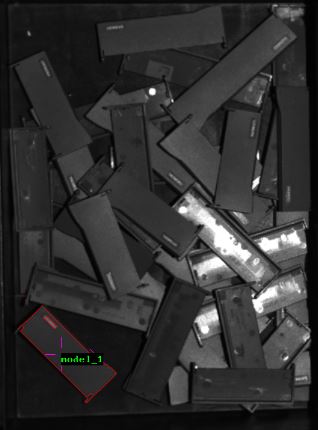

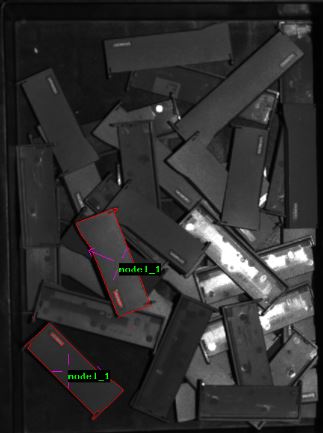

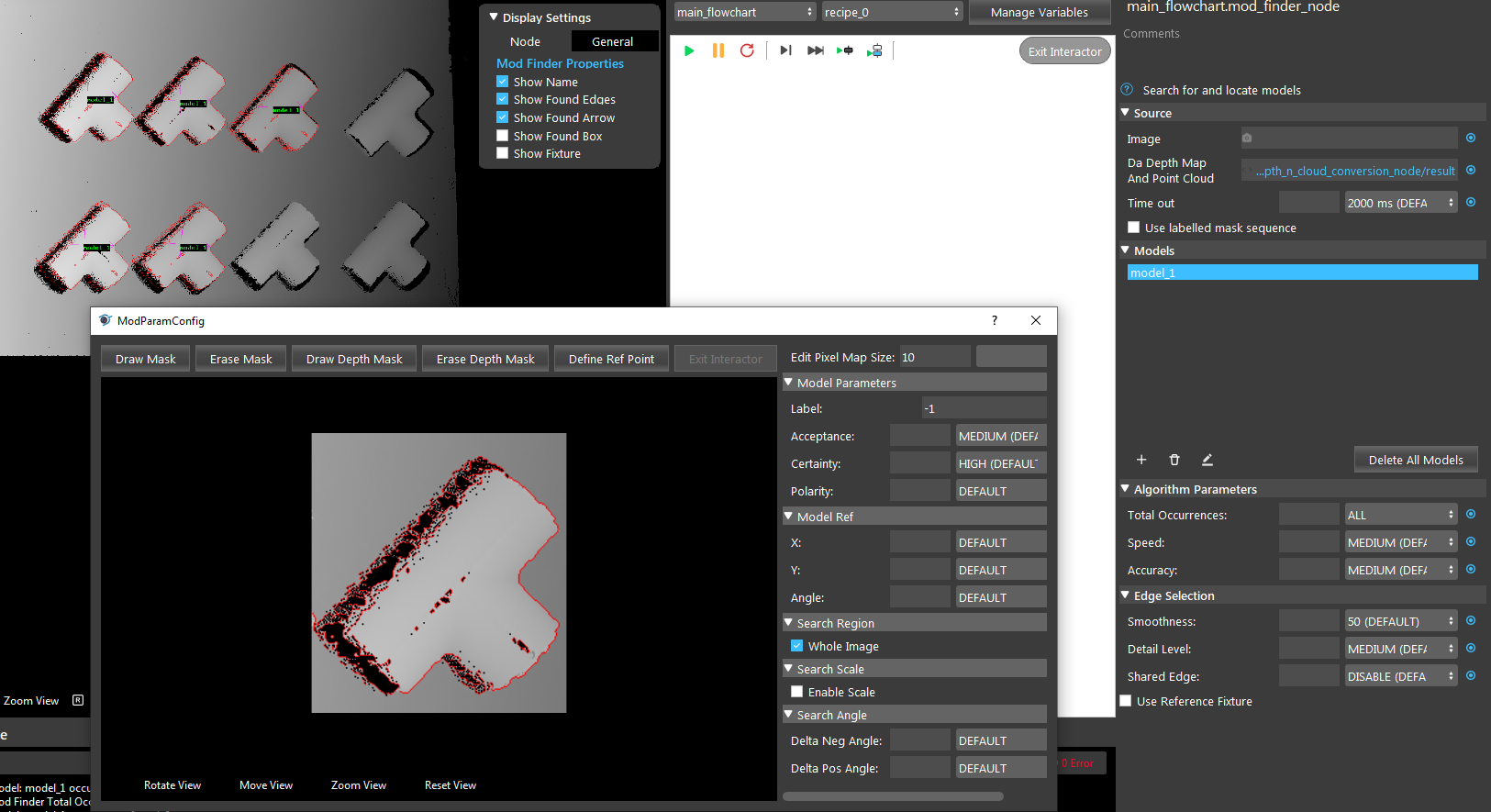

(left: occurrences = 1, middle: occurrences = 2, right: occurrences = ALL)
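The selection rule for Total Occurrences can be sketched as a sort followed by a cut-off. The function name, the `(name, score)` tuples, and the use of `None` to stand in for “ALL” are illustrative assumptions, not the node's API.

```python
from typing import List, Optional, Tuple

Detection = Tuple[str, float]  # (hypothetical id, match score)


def limit_occurrences(
    detections: List[Detection], total_occurrences: Optional[int]
) -> List[Detection]:
    """Keep the best-scoring detections, up to total_occurrences.
    None stands in for the "ALL" setting."""
    ranked = sorted(detections, key=lambda item: item[1], reverse=True)
    if total_occurrences is None:
        return ranked
    return ranked[:total_occurrences]


detections = [("a", 71.0), ("b", 93.5), ("c", 84.0)]
best_one = limit_occurrences(detections, 1)
best_two = limit_occurrences(detections, 2)
everything = limit_occurrences(detections, None)
more_than_found = limit_occurrences(detections, 5)
```

Asking for more occurrences than were detected simply returns all detections.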

Speed: Range [1,4] (Default value: MEDIUM)

The search speed. A larger value means a faster search but lower accuracy.

Accuracy: Range [1,3] (Default value: DISABLE)

The search accuracy. A larger value means higher accuracy but a slower search.

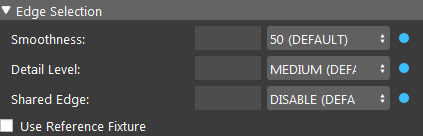

Edge Selection Parameters

Smoothness: Range [0,100) (Default value: 50)

Detail Level: Range [1,3] (Default value: MEDIUM)

The level of detail during edge extraction. The detail level determines what is considered as an edge/background. A larger value means more edges are extracted.

Shared Edge: Range [0,1] (Default value: DISABLE)

Whether edges are shared between different occurrences.

Models

The model is defined from the scene, or it can be imported from a DL_Segment node using a labelled mask sequence. For details of how to define or import a model, see the “Procedure to use” section. This section focuses on the properties of models.

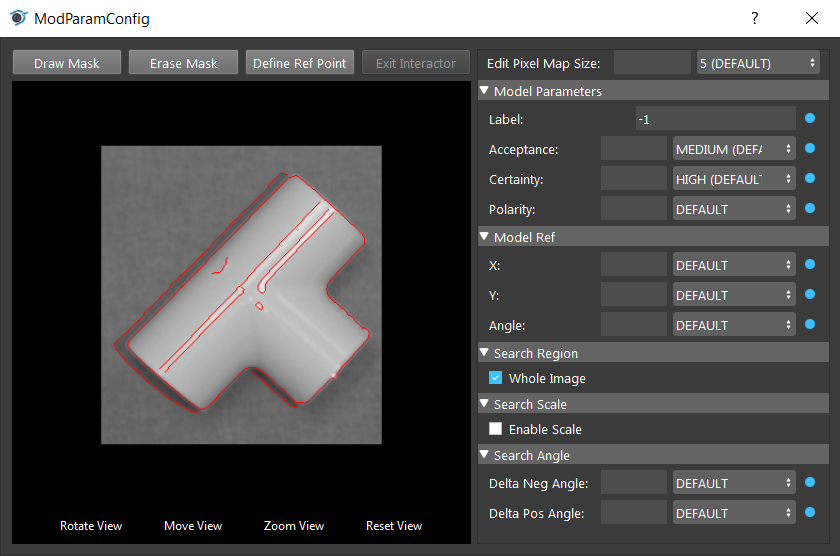

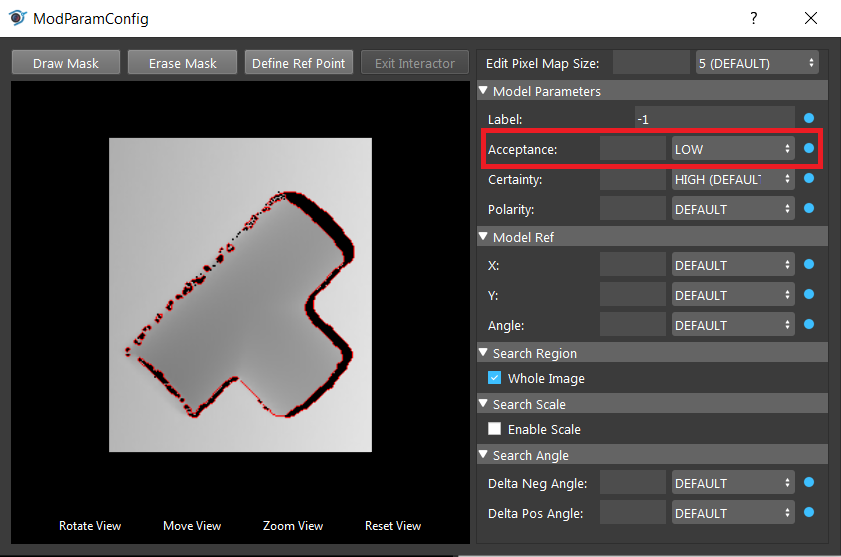

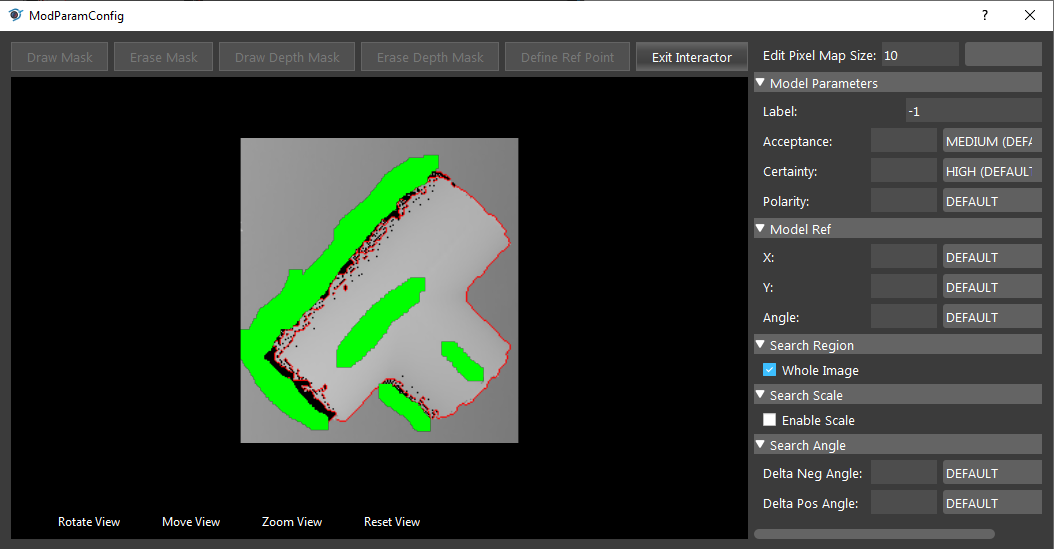

You can adjust the details of the model in the model config page. Double-click the model, or select it and click the edit button, to open the model config page.

Label: (Default value: -1)

Identifies which label from the DL segmentation node this model corresponds to when a labelled mask sequence is used.

Acceptance: Range [0,100] (Default value: MEDIUM)

The minimum match score at which an occurrence is accepted. An occurrence is returned only if the match score between the target and the model is greater than or equal to this level.

Certainty: Range [0,100] (Default value: HIGH)

Sets the certainty level for the score, as a percentage. If both the score and target scores are greater than or equal to their respective certainty levels, the occurrence is considered a match, without searching the rest of the target for better matches (provided the specified number of occurrences has been found).
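The interaction between Acceptance and Certainty can be sketched as a search loop with an early exit. This simplified sketch assumes a single score per candidate (the text above mentions two scores) and that candidates are evaluated one at a time. The function name and threshold values are hypothetical.

```python
from typing import Iterable, List


def search_with_certainty(
    candidate_scores: Iterable[float],
    acceptance: float,
    certainty: float,
    total_occurrences: int,
) -> List[float]:
    """Return accepted scores, stopping early once enough candidates
    have reached the certainty level."""
    accepted: List[float] = []
    certain_count = 0
    for score in candidate_scores:
        if score < acceptance:
            continue  # below the acceptance level: rejected
        accepted.append(score)
        if score >= certainty:
            certain_count += 1
            if certain_count >= total_occurrences:
                break  # certain enough: skip the remaining candidates
    return sorted(accepted, reverse=True)[:total_occurrences]


# With certainty 90, the search stops at 95 and never evaluates 99.
early_stop = search_with_certainty([65, 95, 99], 60, 90, 1)
# With certainty 100, every candidate is evaluated and 99 wins.
full_search = search_with_certainty([65, 95, 99], 60, 100, 1)
```

A lower certainty level trades a possibly better match for a shorter search.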

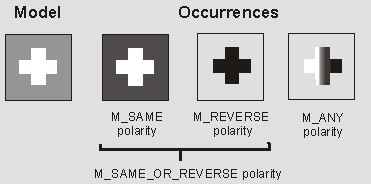

Polarity:

The expected polarity of occurrences, compared to that of the model. If the model is a white circle on a black background, “SAME” searches for a white circle on a black background, “REVERSE” searches for a black circle on a white background, and “ANY” searches for any circle on any background, as long as it forms an edge.

- scale:

100%

X, Y:

The offsets to set the reference point. The X and Y are the X-offset (in pixels) and the Y-offset (in pixels) of the origin of the model’s reference axis, relative to the model origin. The top left corner is (0, 0).

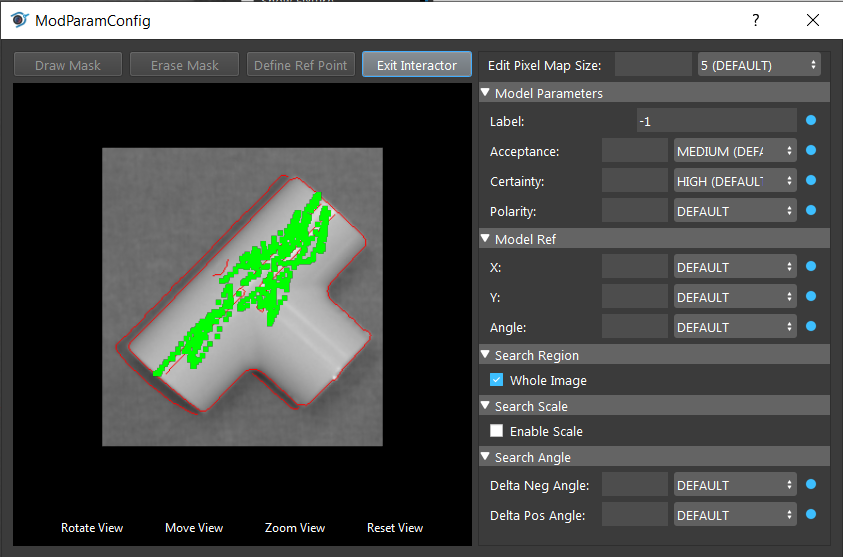

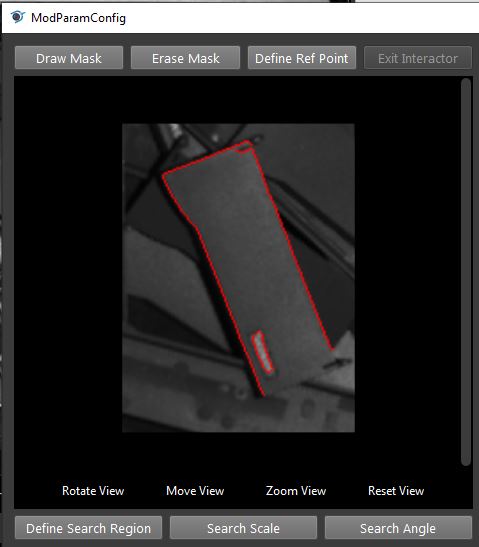

You can also define a reference point in the model image by clicking “Define Ref Point” for the model. This enters interactor mode, where you select a point to use as the reference point. Normally the reference point is the center of all the edge pixels. It is recommended to use the default reference point.
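As an illustration of a reference point at “the center of all the edge pixels”, the sketch below computes the mean position of a set of edge pixel coordinates. Reading “center” as the arithmetic mean (centroid) is an assumption, and `edge_centroid` is a hypothetical helper rather than part of the node.

```python
from typing import List, Tuple


def edge_centroid(edge_pixels: List[Tuple[int, int]]) -> Tuple[float, float]:
    """Return the mean (x, y) of the edge pixels, in pixel coordinates
    with (0, 0) at the top left corner."""
    if not edge_pixels:
        raise ValueError("at least one edge pixel is required")
    count = len(edge_pixels)
    mean_x = sum(x for x, _ in edge_pixels) / count
    mean_y = sum(y for _, y in edge_pixels) / count
    return (mean_x, mean_y)


# Four corner pixels of a hypothetical rectangular edge contour.
reference_point = edge_centroid([(10, 10), (20, 10), (20, 30), (10, 30)])
```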

Angle: Range [0, 360]

The nominal search angle in degrees. This is the angle to find the model’s reference axis.

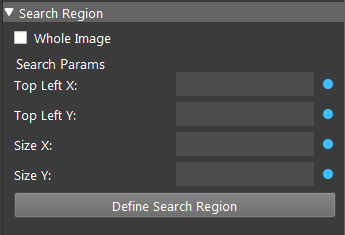

Search Region: (Default value: Whole Image)

The search region can be set to the “Whole Image” or a specified region of the Image.

- Top Left X:

The top left starting X pixel value.

- Top Left Y:

The top left starting Y pixel value.

- Size X:

The X dimension size of the search area in pixels.

- Size Y:

The Y dimension size of the search area in pixels.

For example, if the region of interest is in the top left corner of the image with a size of 1000 × 1000 pixels, the parameters should be set to (0, 0, 1000, 1000).
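The four search region parameters describe a rectangle in pixel coordinates. The sketch below shows how such a rectangle selects a sub-image, using a nested list as a stand-in for the image. The `crop_search_region` helper is hypothetical and only illustrates the coordinate convention.

```python
from typing import List


def crop_search_region(
    image: List[List[int]],
    top_left_x: int,
    top_left_y: int,
    size_x: int,
    size_y: int,
) -> List[List[int]]:
    """Return the sub-image starting at (top_left_x, top_left_y) that is
    size_x pixels wide and size_y pixels high."""
    rows = image[top_left_y:top_left_y + size_y]
    return [row[top_left_x:top_left_x + size_x] for row in rows]


# A 4-row by 6-column test image whose pixel value encodes row and column.
image = [[row * 10 + col for col in range(6)] for row in range(4)]
region = crop_search_region(image, 1, 2, 3, 2)
```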

Alternatively, you can define the search region in the target image by clicking “Define Search Region” and drawing a rectangular ROI on the target image.

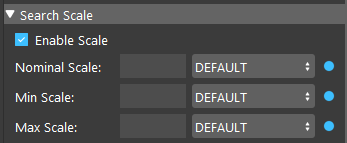

Search Scale:

Sets whether to search for only models within a specified scale range.

- Nominal scale: Range [0.5, 2.0] (Default value: 1.0)

The nominal scale used in scale range calculations.

- Min scale: Range [0.5, 1.0] (Default value: 0.5)

The min scale used in scale range calculations.

- Max scale: Range [1.0, 2.0] (Default value: 2.0)

The max scale used in scale range calculations.

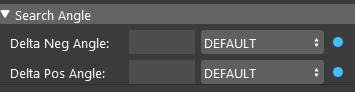

Search Angle:

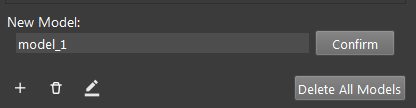

Procedure to use

2D Mod Finder Example

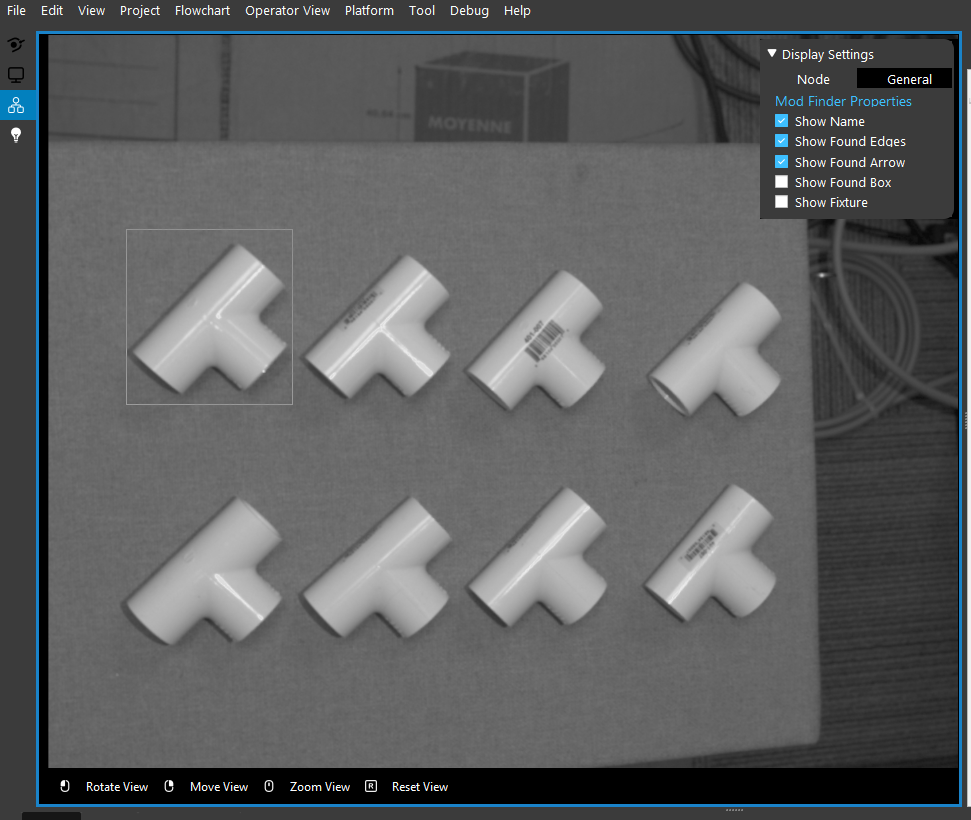

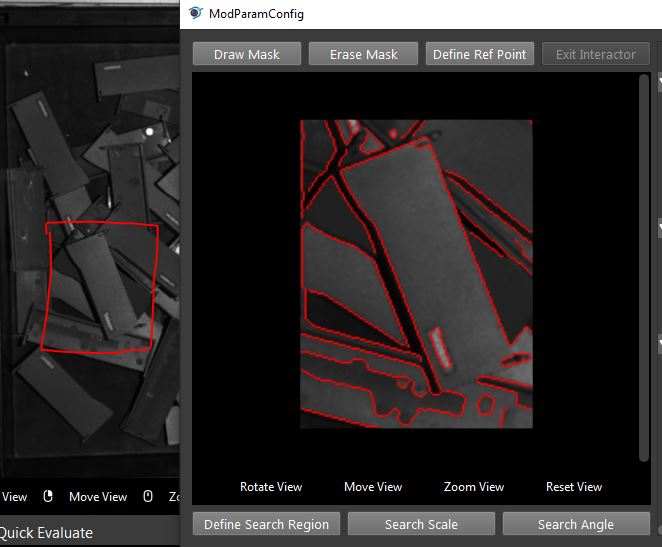

9. The display in the left window enters Interactor mode. Use the mouse to carefully select the region that contains the desired model.

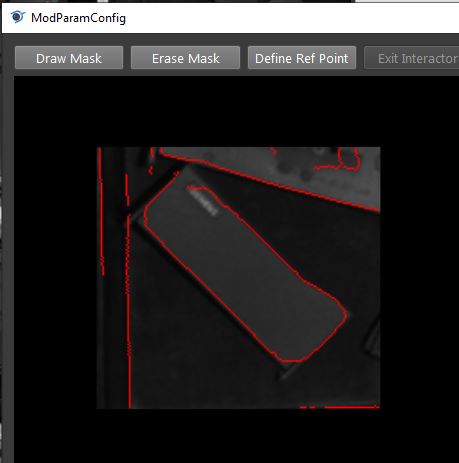

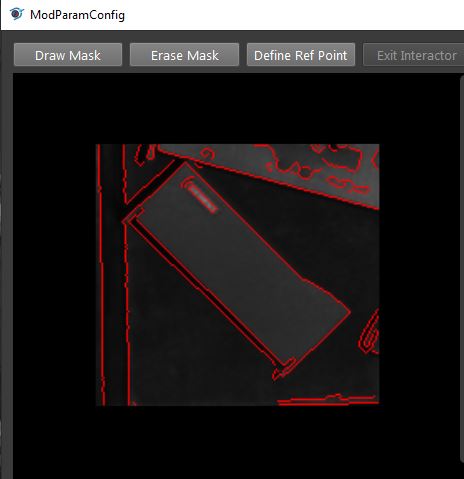

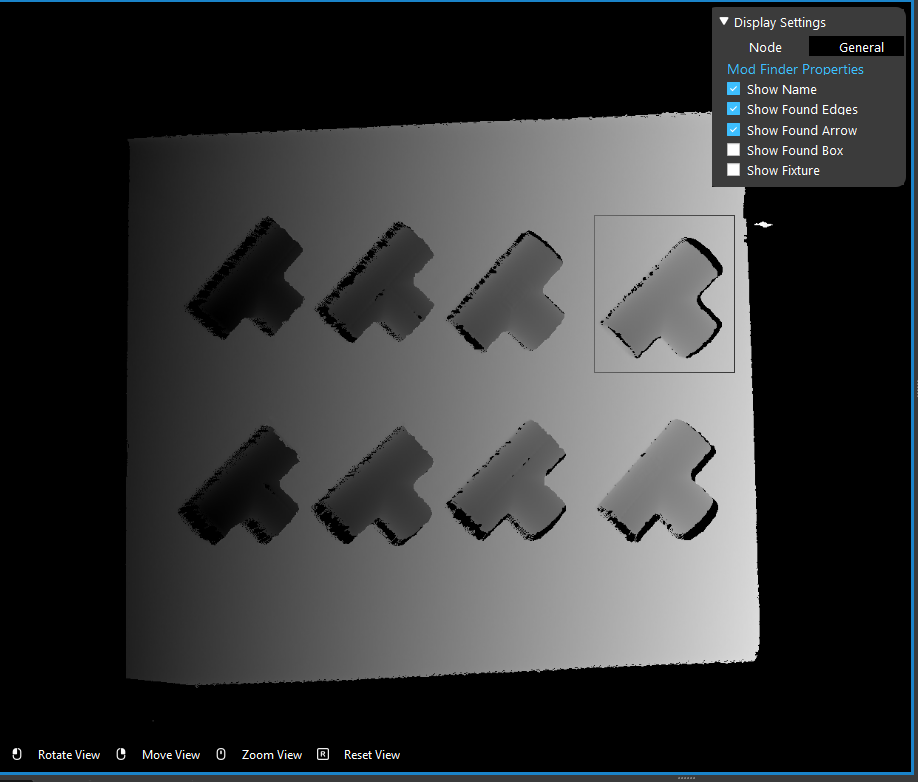

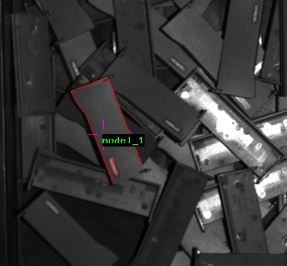

- Run the Mod Finder node. The edges of the found objects are outlined in red in the image display.

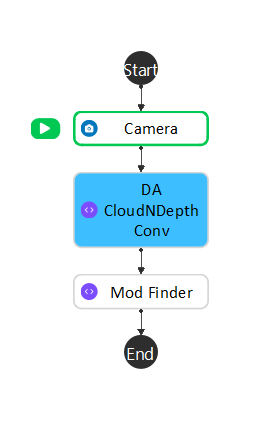

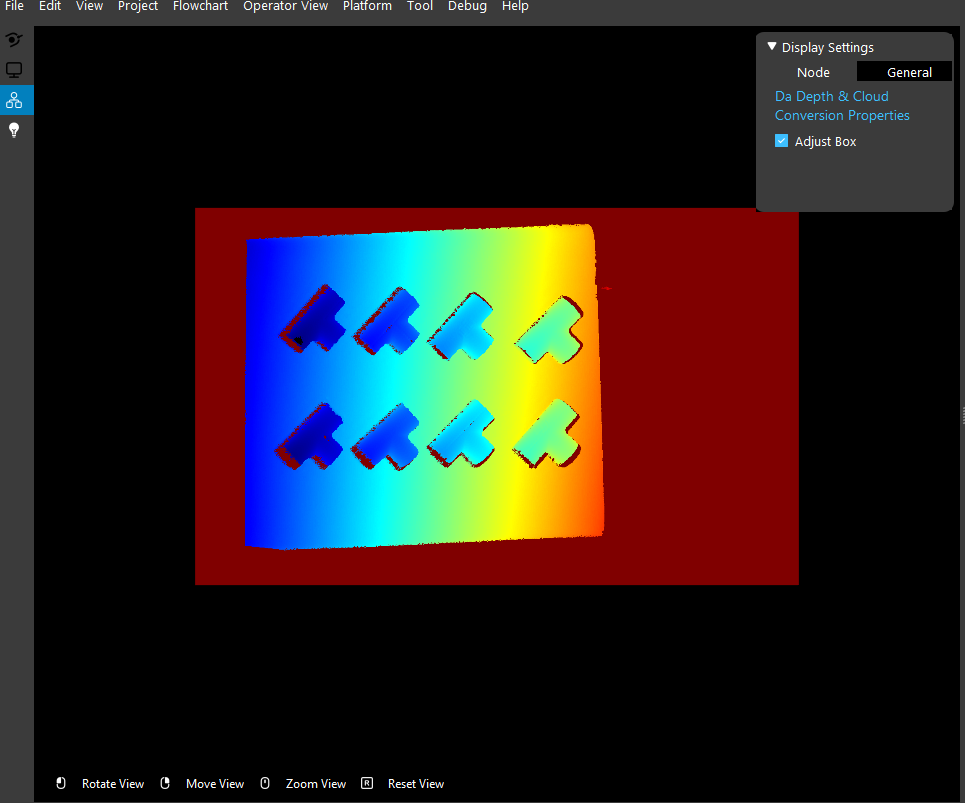

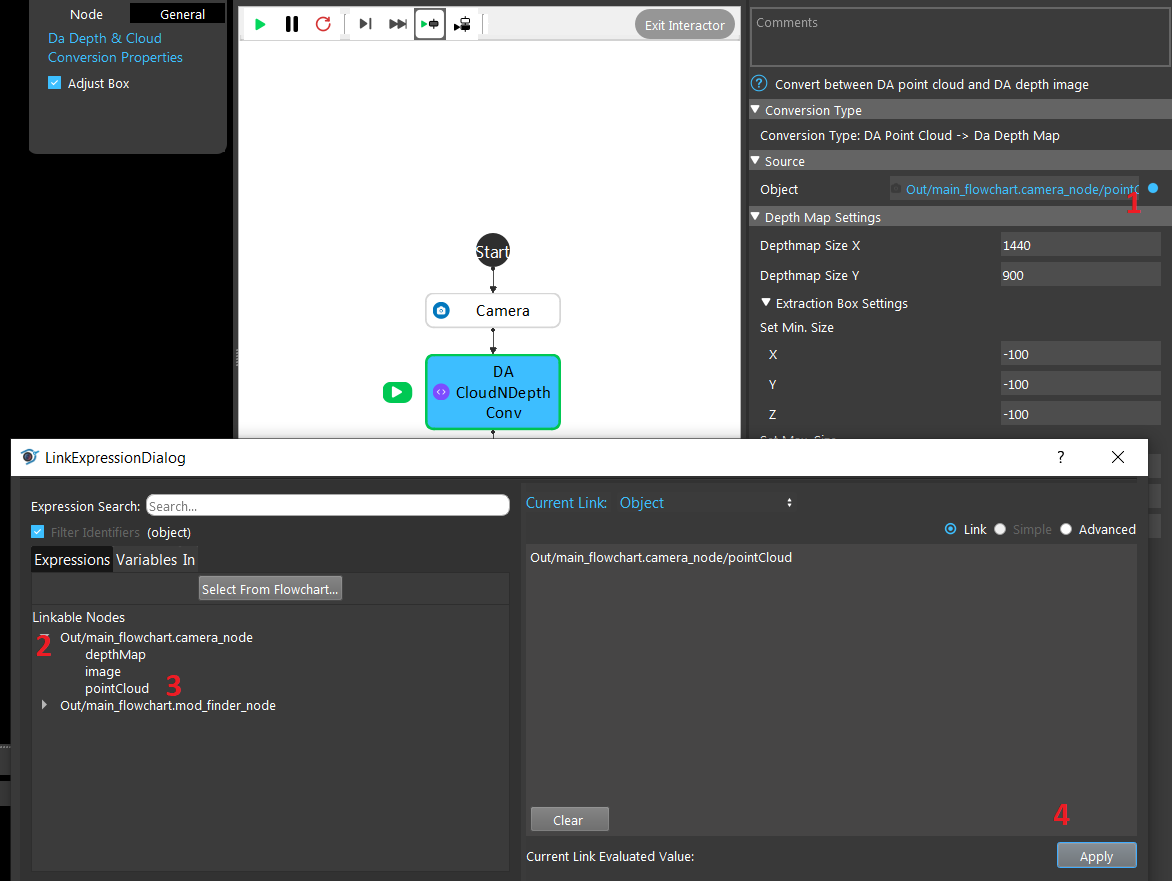

3D Mod Finder Example

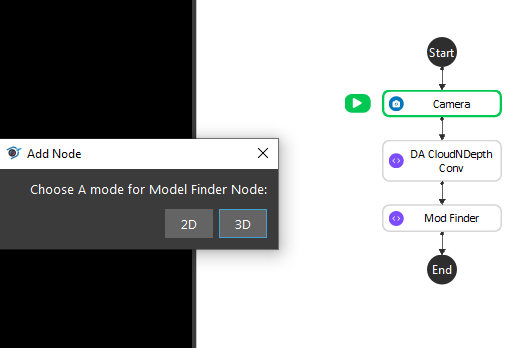

Repeat steps 1 to 3 from the example above.

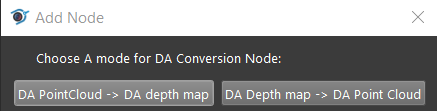

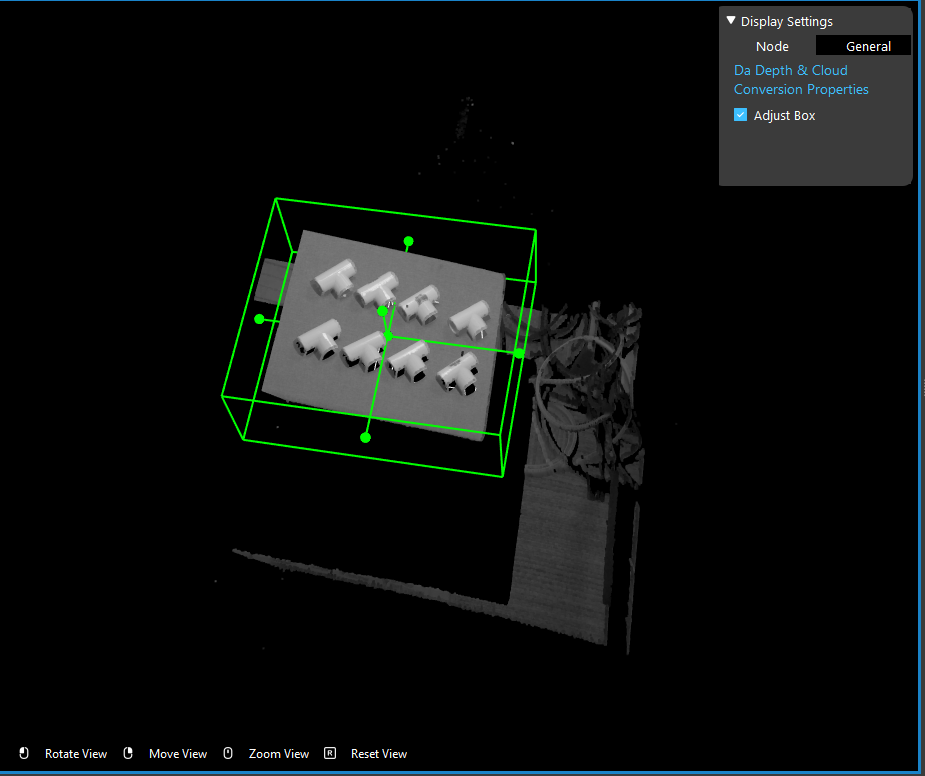

3. The 3D Mod Finder takes a “Da Depth Map And Point Cloud” as input. Insert a “DA CloudNDepth Conv” node under the Camera node. Select “DA PointCloud -> DA depth map” when creating the node.

- Link the point cloud from the above camera node as the input of the “DA CloudNDepth Conv” node.

General process of Using Mod Finder Node

1. Link the input image. For the 3D Mod Finder node, the input must be the output of a DA CloudNDepth Conv node.

2. Run the node once so that the input image shows on the display.

3. Define the model: click the “+” button to add a model, then draw a bounding box in the input image.

4. (Optional) Adjust the model in the model config page.

5. Run the node with other images. The found occurrences of the model will be displayed in the target image.

6. Use the position vector for further processing to get the picking pose of the objects in the scene.

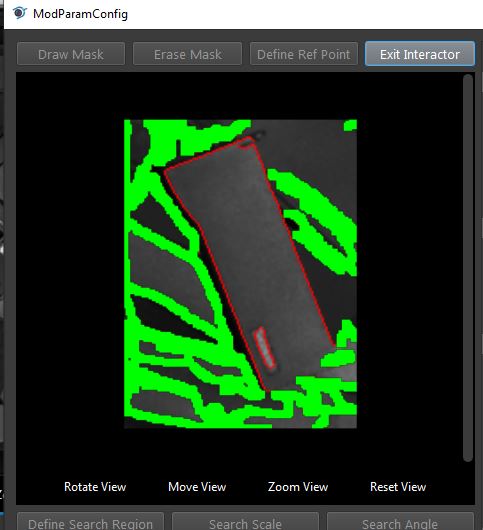

Model Masking

The model in this node is an edgel (edge-based) model. Edge-based models use geometric features from extracted edges to find the position of the object.

Search Model In Labelled Mask Sequence

This is a special use case of the Mod Finder where the input is the result of segmentation (a vector of images, each containing one object) instead of a single image.

1. Use a DL segmentation node to obtain segments and their labels.

2. In the Mod Finder node, define the models and assign the correct label to each model.

3. Check “Use Labelled Mask Sequence”, and link the labelled mask sequence to the mask sequence output of the DL segmentation node.

4. Run the node. For each mask image in the sequence, the node searches for the model whose label matches the label of that mask image (the label of the segment).

The resulting poses (stored in labelledPose2dSequence or labelledPose3dSequence) have the same order as the segments vector of the DL segmentation node.
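The order-preserving, label-driven search can be sketched as follows. The function, the `find` callback, and the choice to emit `None` for a mask whose label has no model are all illustrative assumptions. How the node handles an unmatched label is not stated in this article.

```python
from typing import Callable, Dict, List, Optional, Sequence


def search_labelled_sequence(
    mask_labels: Sequence[int],
    models_by_label: Dict[int, str],
    find: Callable[[int, str], str],
) -> List[Optional[str]]:
    """Search each mask with the model whose label matches it, keeping
    the output in the same order as the input mask sequence."""
    poses: List[Optional[str]] = []
    for index, label in enumerate(mask_labels):
        model = models_by_label.get(label)
        # Assumption: keep a placeholder so the order stays aligned.
        poses.append(find(index, model) if model is not None else None)
    return poses


# Hypothetical labels from a segmentation node and two labelled models.
mask_labels = [2, 1, 2]
models_by_label = {1: "bolt", 2: "nut"}
poses = search_labelled_sequence(
    mask_labels, models_by_label, lambda index, model: f"{model}@mask{index}"
)
```

Because the output has one entry per mask, index `i` in the pose sequence always refers to segment `i`.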

Reference Fixture

Please refer to Reference Fixture System.

This node can be used to generate a fixture.

Exercise

Try to come up with the settings for the Mod Finder node according to the requirements below. You can work on this exercise with the help of this article. Answers are attached at the end of the exercise.

Here are some helpful resources for working on the exercise:

Scenario 1

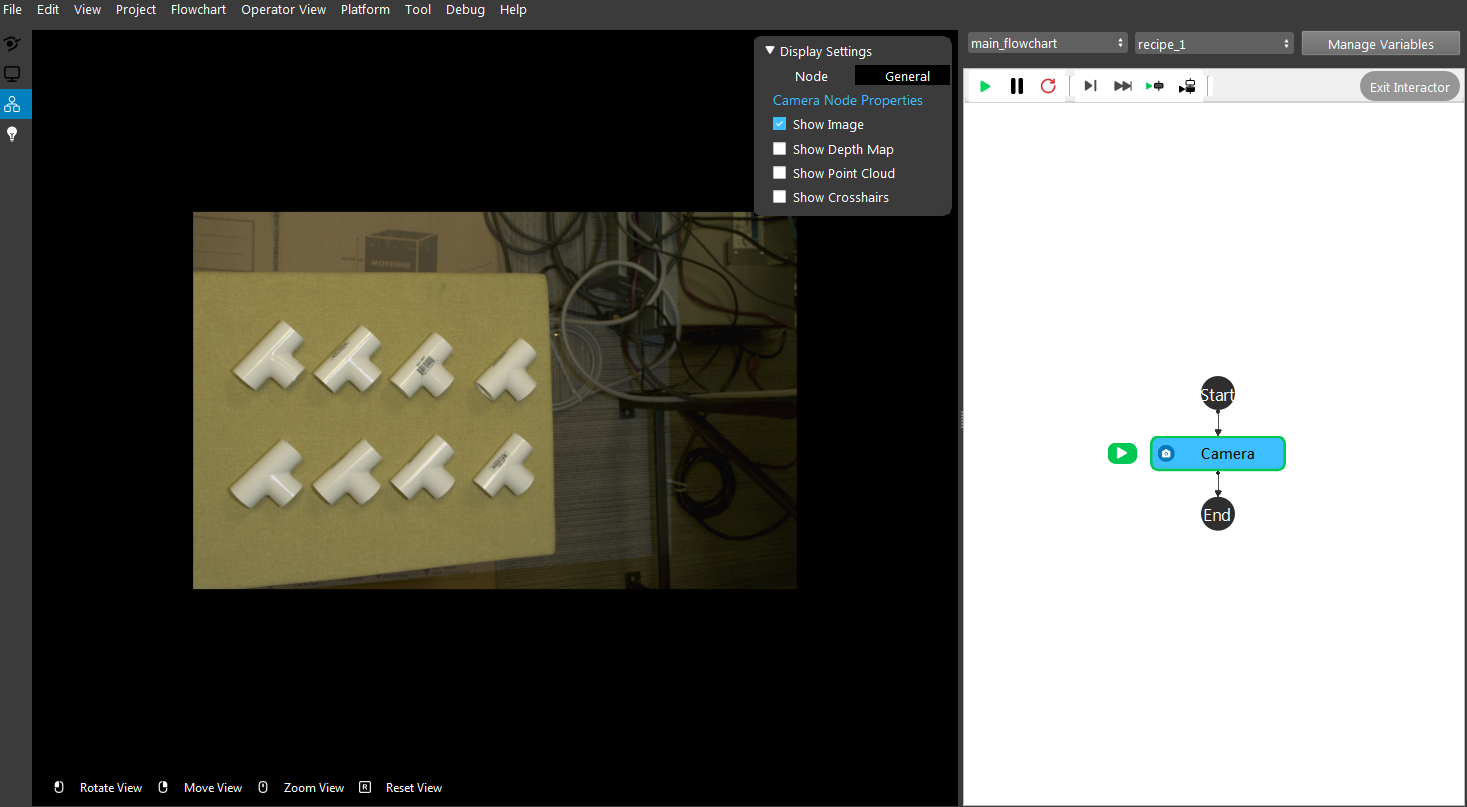

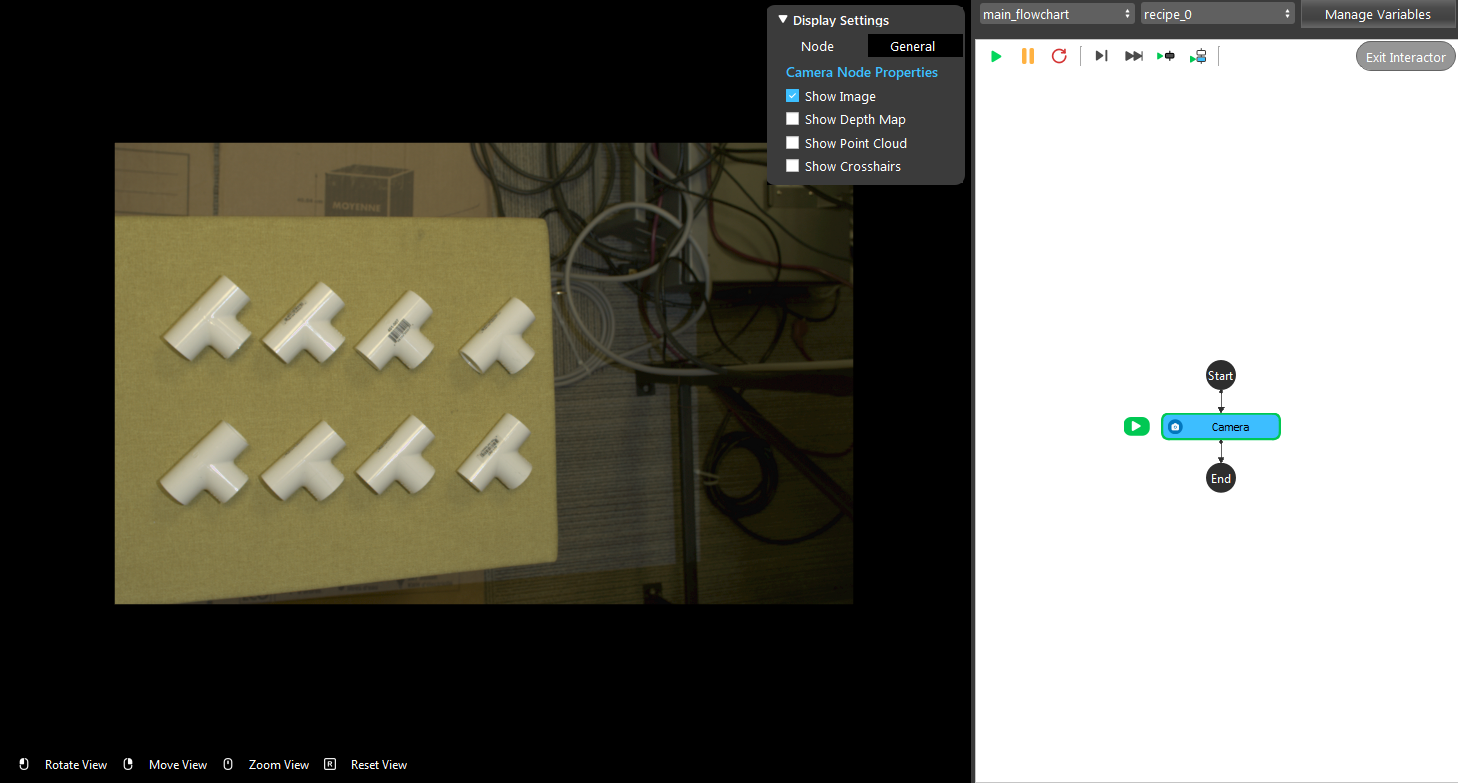

A project requires the robot to pick all the occurrences of the T-tube in the scene. Your colleague has set up the 3D camera and robot in the lab for the experiment. Here’s a link to the .dcf files which are used as camera input.

You need to help him set up the Mod Finder node in main_flowchart. Please choose all the correct answers from the options:

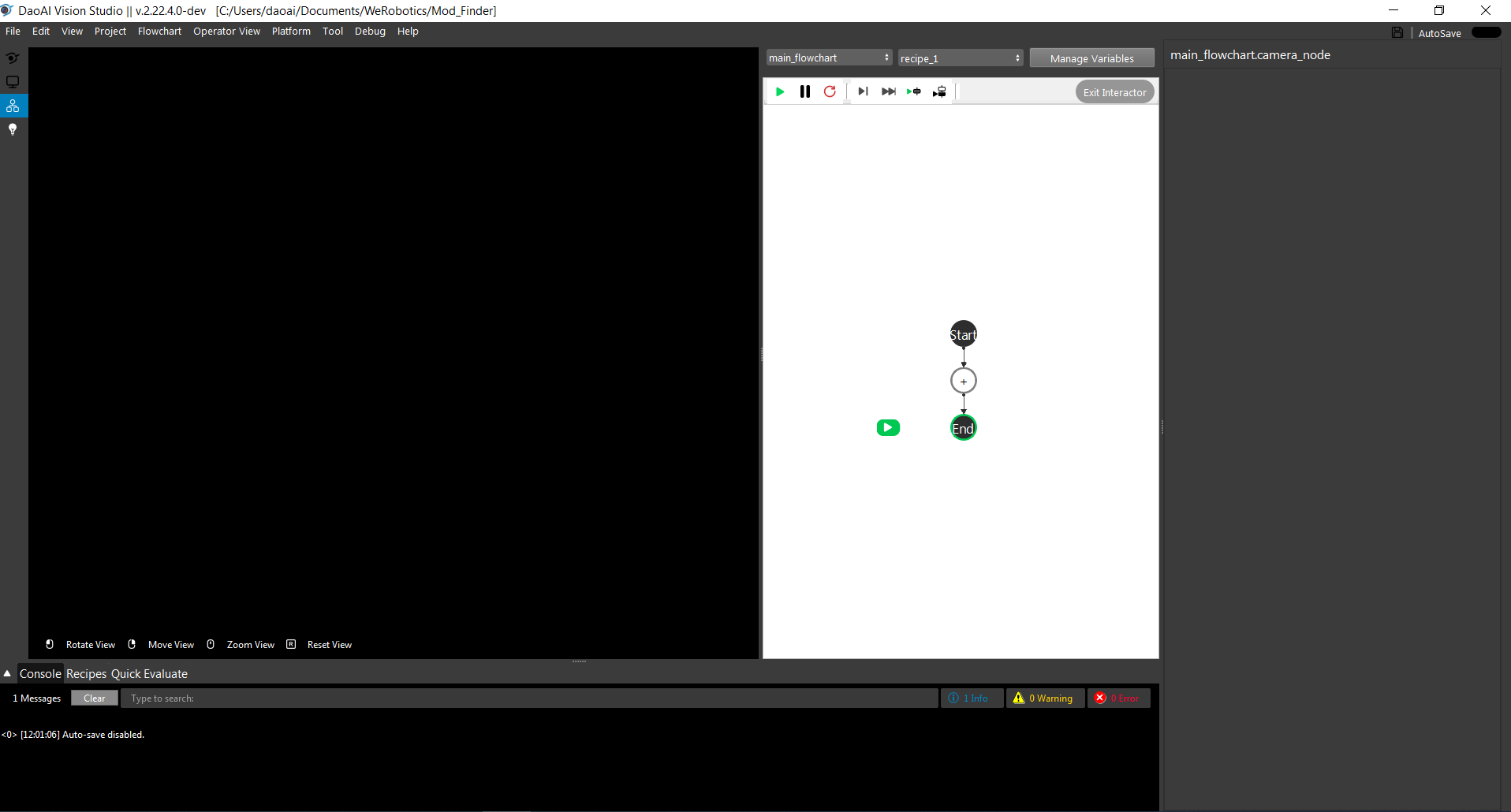

- As shown in the following image, the camera node is set up for you. Suppose you are asked to use a Mod Finder (3D) to detect the object in the image. How should you set up the node?

A. Right click Camera node and insert Mod Finder node, then click 2d from the dialog.

B. Right click Camera node and insert Mod Finder node, then click 3d from the dialog.

C. Right click Camera node and insert DA CloudNDepth Conv node + Mod Finder node, then click 3d from the dialog.

D. Right click Camera node and insert ModFinder3D.

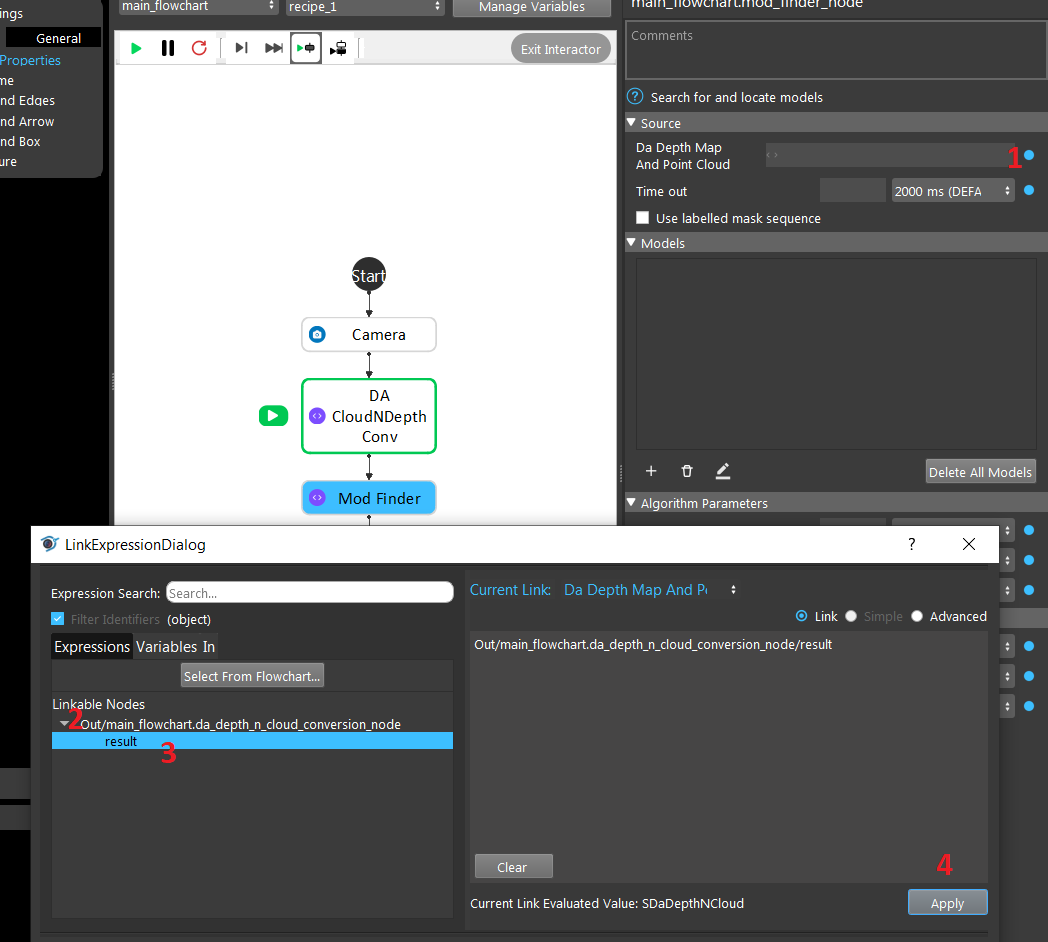

- As shown in the following image, you have created the Mod Finder (3D) node, and you want to set up the input for the node. How do you do this?

A. Click on the blue dot next to “Image” and link to Camera node.

B. Click on the blue dot next to “Da Depth Map and Point Cloud” and link to Camera node.

C. Click on the blue dot next to “Image” and link to Da CloudNDepth Conv node.

D. Click on the blue dot next to “Da Depth Map and Point Cloud” and link to Da CloudNDepth Conv node.

- As shown in the following image, you have captured a model, but you find that the detected features contain noise and the node fails to detect all T-tubes in the image. How do you remove the noise from the model?

A. Double click the model and draw mask on the model.

B. Change Edge Selection -> Smoothness from Default to 80.

C. Change Algorithm Parameters -> Accuracy from Default to Low.

D. Double click the model and draw Depth mask on the model.

Answers for Exercises

Scenario 1

Answer: C

Explanation: The 3D Mod Finder must take its input from a DA CloudNDepth Conv node. Therefore, you need to insert a DA CloudNDepth Conv node followed by a Mod Finder node, and choose 3d.

Answer: D

Explanation: As mentioned in the first answer, the Mod Finder (3D) must take its input from a DA CloudNDepth Conv node, so the corresponding input is “Da Depth Map and Point Cloud”. Click the blue dot next to it and link it to the DA CloudNDepth Conv node.

Answer: A

Explanation: While modifying smoothness and accuracy does affect the tolerance of detection, it does not directly affect the model’s features. Drawing masks, on the other hand, directly removes the noise in the model.