
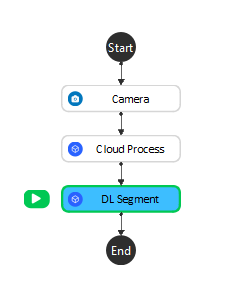

DL Segment Node

Overview

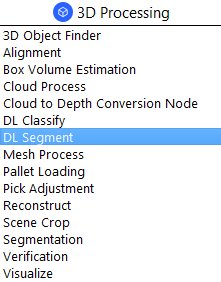

The Deep Learning (DL) Segment Node segments objects in point clouds and images using pre-trained deep learning models.

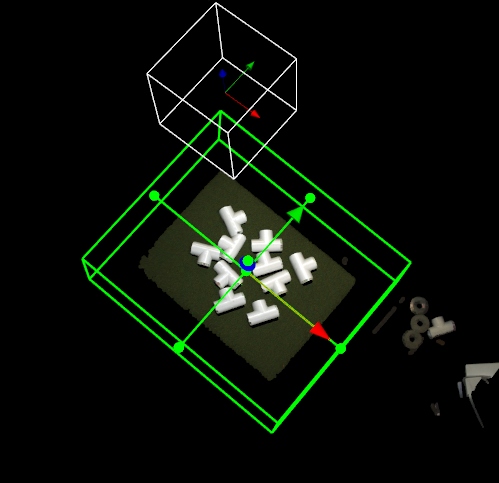

DL segmentation can be performed on a point cloud, an image, or both. Note that DL segmentation works best when the input contains background around the object. During pre-processing, avoid filters (such as a color filter) that remove the background and keep only the object.

Input and Output

| Output | Type | Description |
|---|---|---|
| bboxResults | vector<Box2f> | A vector of bounding boxes of the segments. Each element contains the top-left and bottom-right coordinates of a bounding box. |
| maskOnScene | Image | |
| maskedScene | Image | RGB image showing all segments in the input image. |
| numDetected | int | Number of segments detected. |
| segmResults | vector<Segm> | |
| segmentLocations | vecPose2D | |
| segmentMasks | vecImage | |
| segmentRGBs | vecImage | Images of the obtained segments. |
| success | bool | Whether the segmentation succeeded (at least one segment was found). |
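Each bboxResults entry stores only two corner points. The Python sketch below shows how width, height, center, and area can be derived from them for downstream use. The `Box2f` dataclass and its field names `x1`, `y1`, `x2`, `y2` are hypothetical stand-ins, not the node's actual type definition, and image coordinates are assumed to grow rightward in x and downward in y.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box2f:
    """Hypothetical stand-in for the node's Box2f type (field names are assumptions).

    (x1, y1) is the top-left corner and (x2, y2) the bottom-right corner, in pixels,
    with x growing to the right and y growing downward.
    """

    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def width(self) -> float:
        return self.x2 - self.x1

    @property
    def height(self) -> float:
        return self.y2 - self.y1

    @property
    def center(self) -> tuple:
        # Midpoint of the two corners.
        return ((self.x1 + self.x2) / 2.0, (self.y1 + self.y2) / 2.0)

    @property
    def area(self) -> float:
        # Clamp at 0 so a degenerate box never reports a negative area.
        return max(0.0, self.width) * max(0.0, self.height)


bbox_results = [Box2f(10.0, 20.0, 50.0, 60.0), Box2f(100.0, 40.0, 130.0, 100.0)]
for box in bbox_results:
    print(box.center, box.area)  # (30.0, 40.0) 1600.0, then (115.0, 70.0) 1800.0
```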

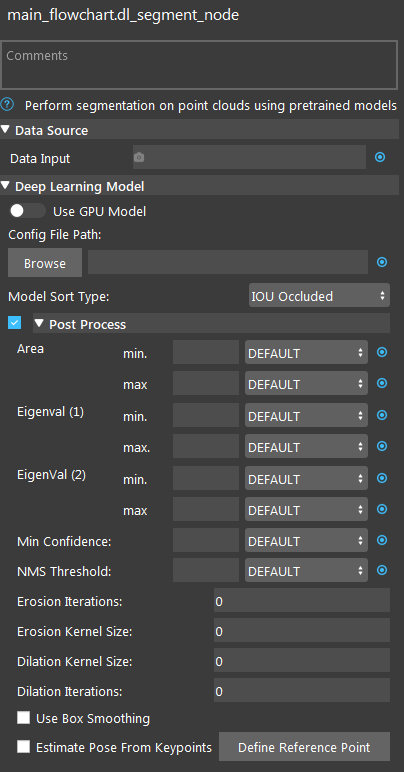

Node Settings

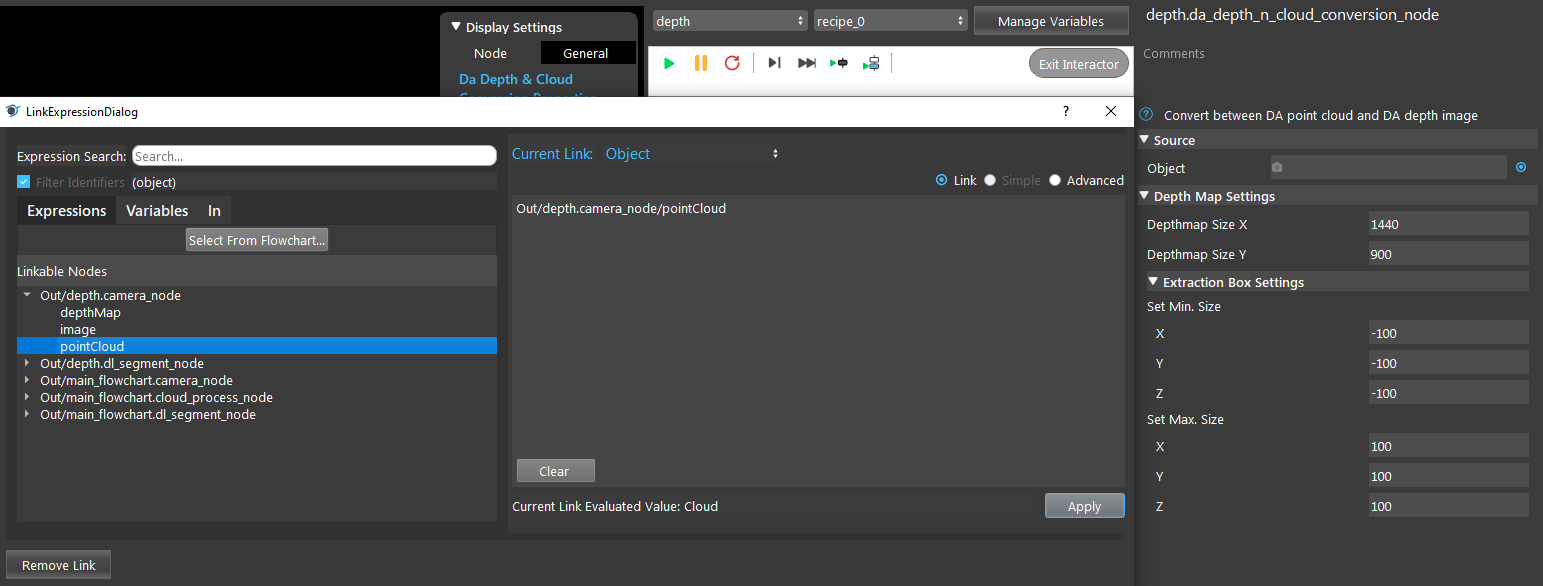

Data Source

- Data Input: the input scene to generate the segmentation from.
  - If RGBD, use a point cloud.
  - If RGB, use an image or a point cloud.
  - If Depth, use a depth image.

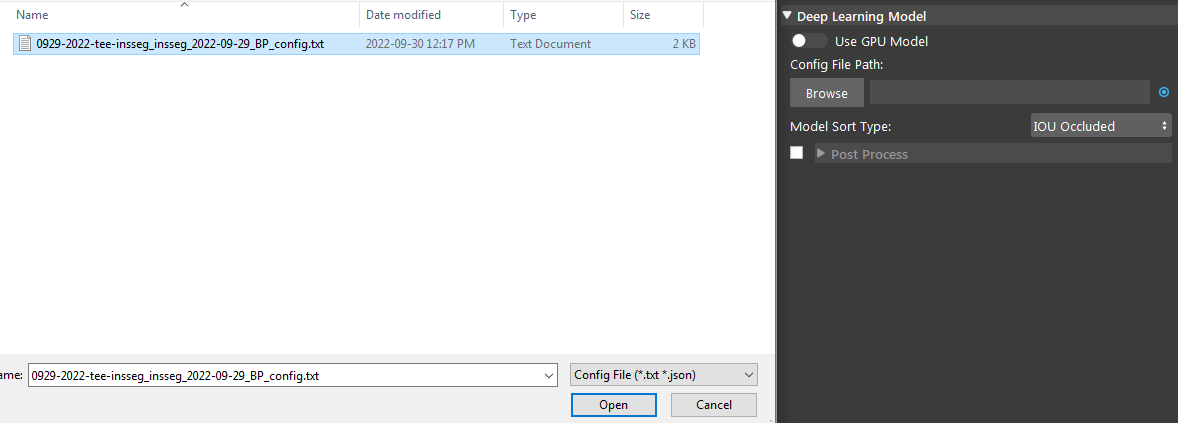

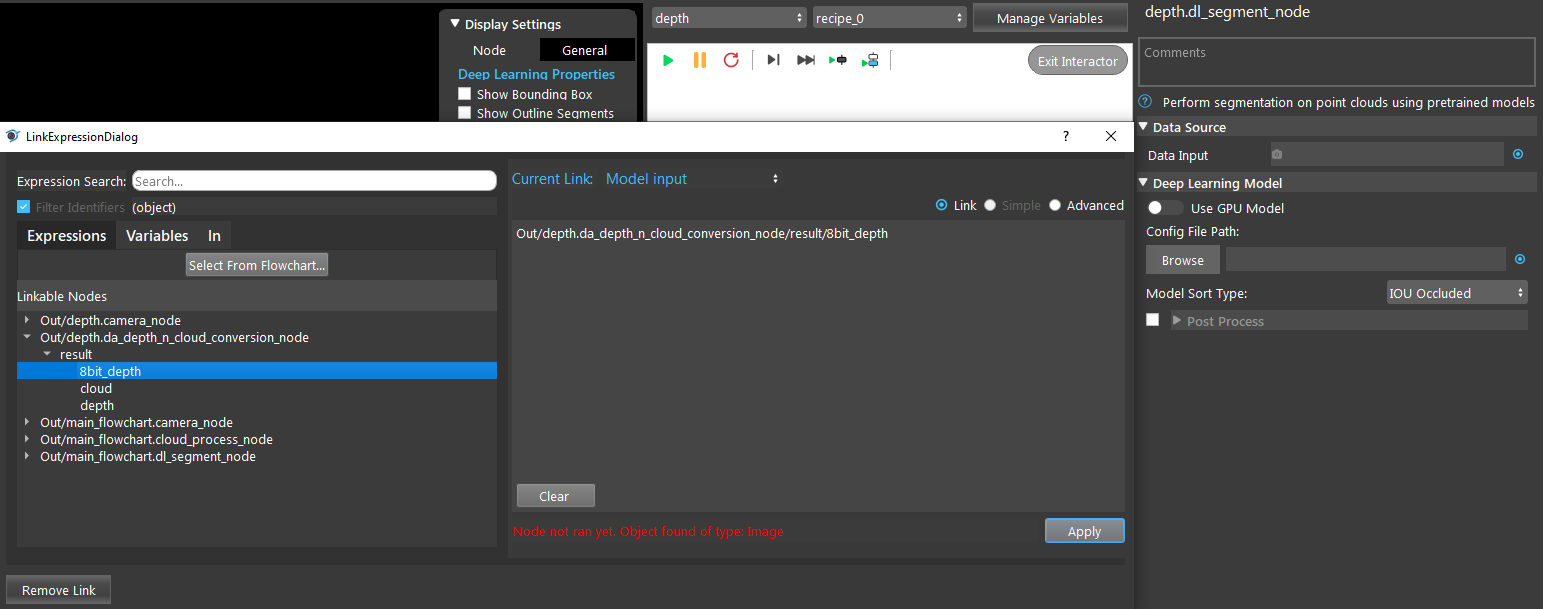

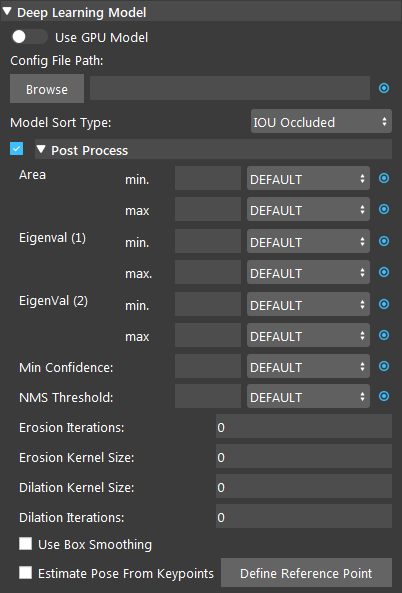

Deep Learning Model

- Use GPU Model (Default: false)

When this option is enabled, the GPU model is used; otherwise, the CPU model is used.

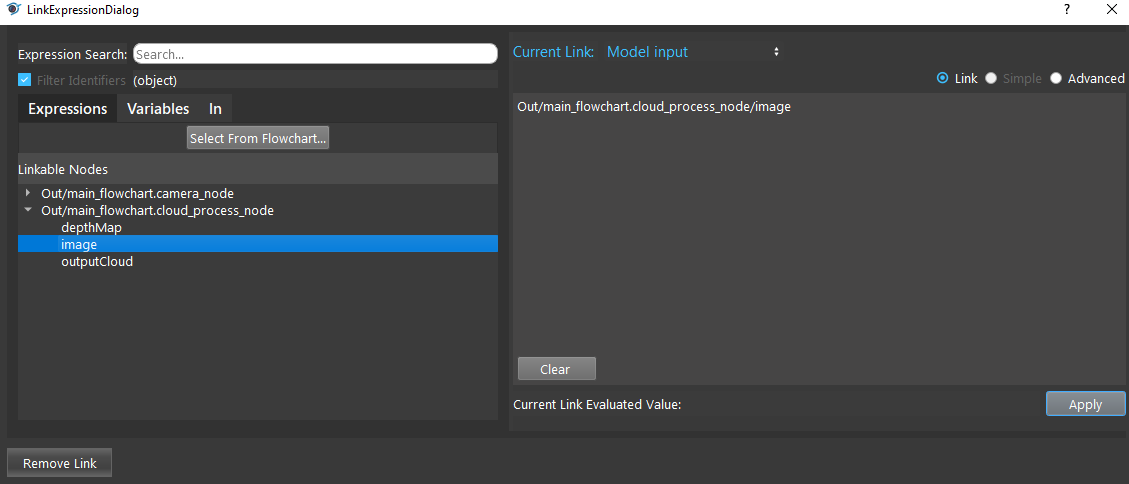

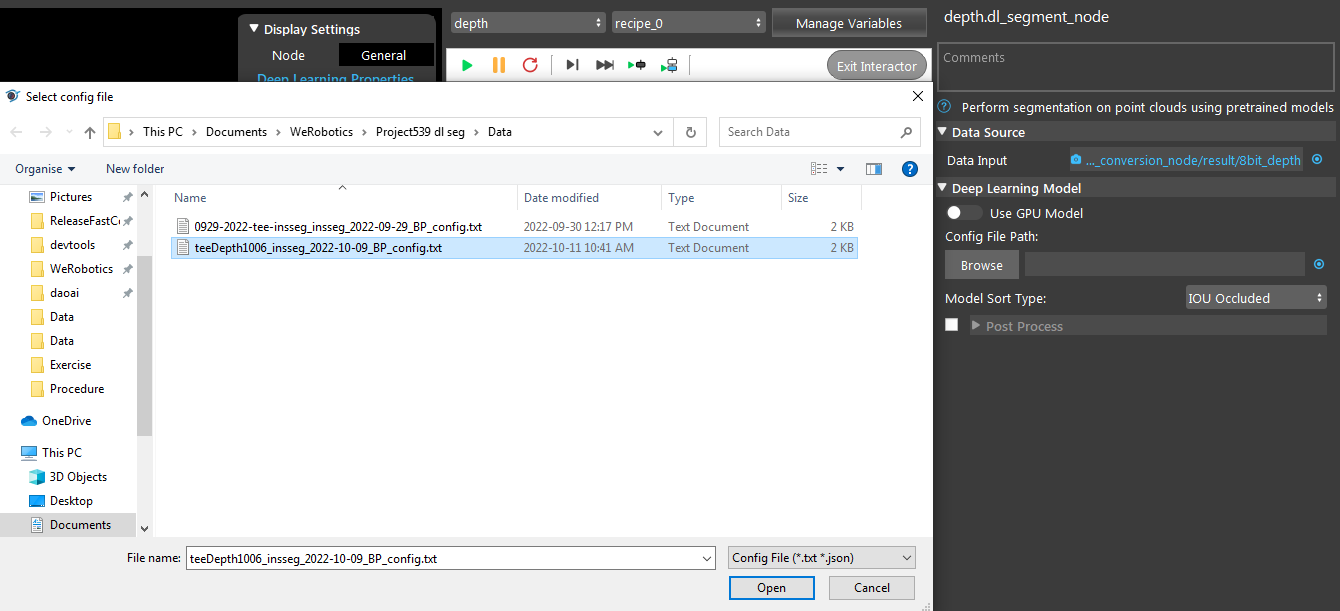

- Config File Path

The path to the deep learning config file (.txt).

- Model Sort Type (Default: IOU Occluded)

- Sets how the results are sorted. Choose one of IOU Occluded, Binary Occluded, or Area.

IOU Occluded: computes the Intersection over Union (IOU) between segments to measure how much they overlap, and determines which segment occupies more of each overlapping region. Segments are ordered so that objects that are not occluded are picked first.

Binary Occluded: similar to IOU Occluded, but uses a binary value to indicate whether any other segment overlaps a given segment.

Area: sorts by segment area.
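The three sort modes can be illustrated with a small Python/NumPy sketch. The node's actual ranking is not documented beyond the description above, so the occlusion heuristic here is an assumption: each segment is given as a per-pixel probability map, masks come from thresholding at 0.5, and in every pairwise overlap the segment with the larger summed probability is treated as the one on top. The other segment is penalized by the pair's IOU (IOU Occluded) or simply flagged as occluded (Binary Occluded). Sorting Area largest-first is also an assumption, and the helper names are hypothetical.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """Intersection over Union of two boolean masks with the same shape."""
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(a, b).sum() / union)


def occlusion_scores(probs: list, binary: bool = False) -> list:
    """Hypothetical occlusion score per segment; lower means less occluded.

    probs: one probability map in [0, 1] per segment (assumed input format).
    In each pairwise overlap, the segment with the larger summed probability is
    assumed to be on top; the other one is penalised by the pair's IoU, or simply
    flagged with 1.0 when binary=True.
    """
    masks = [p >= 0.5 for p in probs]
    scores = [0.0] * len(probs)
    for i in range(len(probs)):
        for j in range(i + 1, len(probs)):
            overlap = np.logical_and(masks[i], masks[j])
            if not overlap.any():
                continue
            i_on_top = probs[i][overlap].sum() >= probs[j][overlap].sum()
            occluded = j if i_on_top else i
            if binary:
                scores[occluded] = 1.0
            else:
                scores[occluded] += mask_iou(masks[i], masks[j])
    return scores


def sort_segments(probs: list, mode: str = "iou") -> list:
    """Segment indices in pick order for mode 'iou', 'binary' or 'area'."""
    if mode == "area":
        # Assumed: largest segment first.
        areas = [int((p >= 0.5).sum()) for p in probs]
        return sorted(range(len(probs)), key=lambda k: -areas[k])
    scores = occlusion_scores(probs, binary=(mode == "binary"))
    return sorted(range(len(probs)), key=lambda k: scores[k])


# Two overlapping segments: "top" is more confident in the shared 2x2 region.
top = np.zeros((6, 6))
top[1:4, 1:4] = 0.9      # 3x3 = 9 pixels
under = np.zeros((6, 6))
under[2:6, 2:6] = 0.6    # 4x4 = 16 pixels
print(sort_segments([under, top], "iou"))   # [1, 0]: the unoccluded "top" is picked first
print(sort_segments([under, top], "area"))  # [0, 1]: the larger "under" comes first
```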

- Area min./max. (Range: [0,1.0])

Available in Post Process. The minimum and maximum fraction of the image that a segment may occupy (for example, 0.05 means 5% of the image).
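A minimal sketch of the Area min./max. filter, assuming the area is measured as the fraction of image pixels covered by a segment's mask and that both limits are inclusive. `filter_by_area` is a hypothetical helper name.

```python
import numpy as np


def filter_by_area(masks, area_min=0.0, area_max=1.0):
    """Indices of masks whose covered fraction of the image lies in [area_min, area_max]."""
    kept = []
    for idx, mask in enumerate(masks):
        fraction = np.count_nonzero(mask) / mask.size  # assumed area measure
        if area_min <= fraction <= area_max:
            kept.append(idx)
    return kept


small = np.zeros((10, 10), dtype=bool)
small[0:2, 0:2] = True   # 4 of 100 pixels = 0.04
large = np.zeros((10, 10), dtype=bool)
large[0:8, 0:8] = True   # 64 of 100 pixels = 0.64
print(filter_by_area([small, large], area_min=0.05, area_max=0.5))  # []
print(filter_by_area([small, large], area_min=0.01, area_max=0.5))  # [0]
```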

- Eigenval (1)/(2) min./max. (Range: [0,1])

Available in Post Process. As in the segmentation step, the Principal Component Analysis (PCA) of each segment is calculated, and its eigenvalues are limited to the given min./max. values. Eigenvalue 1 corresponds to the longer axis.
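The eigenvalue limits can be illustrated by running PCA on the pixel coordinates of a mask. The node's normalization is not documented here; purely as an assumption, the sketch scales the two eigenvalues so they sum to 1, which keeps both in [0, 1] and makes eigenvalue 1 (the longer axis) close to 1 for elongated segments and close to 0.5 for compact ones. It also assumes segments outside the limits are discarded. Both helper names are hypothetical.

```python
import numpy as np


def segment_eigenvalues(mask: np.ndarray) -> tuple:
    """PCA eigenvalues (eig1, eig2) of a mask's pixel coordinates, eig1 >= eig2.

    eig1 belongs to the longer axis. The values are normalised to sum to 1; this
    normalisation is an assumption made for illustration only.
    """
    rows, cols = np.nonzero(mask)
    if rows.size < 2:
        return 0.0, 0.0  # not enough pixels for a covariance estimate
    coords = np.stack([cols, rows], axis=1).astype(float)  # one (x, y) per pixel
    cov = np.cov(coords, rowvar=False)                      # 2x2 covariance matrix
    eig2, eig1 = np.linalg.eigvalsh(cov)                    # returned in ascending order
    eig1, eig2 = float(eig1), max(float(eig2), 0.0)         # clamp tiny negative round-off
    total = eig1 + eig2
    if total <= 0:
        return 0.0, 0.0
    return eig1 / total, eig2 / total


def passes_eigen_limits(mask, eig1_range=(0.0, 1.0), eig2_range=(0.0, 1.0)) -> bool:
    """Assumed filter: keep a segment only if both eigenvalues are within their limits."""
    eig1, eig2 = segment_eigenvalues(mask)
    return eig1_range[0] <= eig1 <= eig1_range[1] and eig2_range[0] <= eig2 <= eig2_range[1]


bar = np.zeros((10, 20), dtype=bool)
bar[4:6, :] = True       # long, thin segment
square = np.zeros((10, 20), dtype=bool)
square[2:6, 2:6] = True  # compact segment
print(segment_eigenvalues(bar), segment_eigenvalues(square))
print(passes_eigen_limits(bar, eig1_range=(0.0, 0.8)))  # False: too elongated
```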

- Min Confidence (Range: [0,1])

Available in Post Process. The minimum confidence a predicted object must have to be kept.

- NMS Threshold (Range: [0,1]; Default: 0.8)

Available in Post Process. The threshold for applying soft NMS (Non-Maximum Suppression) to the bounding boxes. Soft NMS suppresses boxes that overlap too much with a higher-confidence box.
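Soft NMS lowers the scores of overlapping boxes instead of deleting them outright. The sketch below uses the linear soft-NMS variant, in which a box whose IoU with a higher-scoring box reaches the threshold has its score multiplied by (1 - IoU); whether the node uses this variant or the Gaussian one is an assumption. It also applies a Min Confidence cut-off to the rescored boxes, which is a simplified guess at how the two settings interact. Boxes are (x1, y1, x2, y2) tuples (top-left, bottom-right), and the helper names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2): top-left and bottom-right corners."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, nms_threshold=0.8, min_confidence=0.5):
    """Linear soft-NMS followed by a confidence cut-off (assumed combination).

    Returns (index, rescored confidence) pairs, highest score first.
    """
    remaining = list(range(len(boxes)))
    scores = list(scores)  # work on a copy; the caller's scores are untouched
    kept = []
    while remaining:
        best = max(remaining, key=lambda k: scores[k])
        remaining.remove(best)
        if scores[best] < min_confidence:
            break  # every remaining box scores no higher than this one
        kept.append((best, scores[best]))
        for k in remaining:
            iou = box_iou(boxes[best], boxes[k])
            if iou >= nms_threshold:
                scores[k] *= 1.0 - iou  # linear decay instead of hard removal
    return kept


boxes = [(0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 9.0), (20.0, 20.0, 30.0, 30.0)]
scores = [0.95, 0.9, 0.7]
print(soft_nms(boxes, scores, nms_threshold=0.8, min_confidence=0.5))  # [(0, 0.95), (2, 0.7)]
```

Box 1 overlaps box 0 with IoU 0.9, so its score decays from 0.9 to about 0.09 and falls below the cut-off; box 2 does not overlap anything and is kept.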

- Erosion/Dilation Kernel Size (Range: [0, 100]; Default: 0)

Available in Post Process. The kernel size used for the erosion/dilation applied to the segmentation mask and segmentation RGB image.

- Erosion/Dilation Iterations (Range: [0, 100]; Default: 0)

Available in Post Process. The number of times erosion/dilation is applied to the segmentation mask and segmentation RGB image.

The initial result of the DL Segment node may not contain all points of the object, because many deep learning models downsample the input for prediction. You can adjust the segment mask size to suit your needs (to reduce possible noise, or to include as much object information as possible) using the erosion and dilation post-processing options: erosion shrinks the mask and dilation grows it.
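The effect of Erosion/Dilation Kernel Size and Iterations can be reproduced with standard binary morphology. The NumPy sketch below assumes a square kernel (the node's kernel shape is not stated) and treats a kernel size or iteration count of 0 as "no change", matching the defaults. The helper names are hypothetical; in OpenCV, `cv2.erode` and `cv2.dilate` with an `iterations` argument perform the same kind of operation.

```python
import numpy as np


def _morph_once(mask: np.ndarray, kernel_size: int, erode: bool) -> np.ndarray:
    """One pass of binary erosion (erode=True) or dilation with a square kernel."""
    r = kernel_size // 2
    h, w = mask.shape
    # Pad with True for erosion and False for dilation so the image border itself
    # neither eats into nor grows the mask.
    padded = np.pad(mask, r, mode="constant", constant_values=erode)
    out = np.ones_like(mask) if erode else np.zeros_like(mask)
    for dy in range(kernel_size):
        for dx in range(kernel_size):
            window = padded[dy:dy + h, dx:dx + w]
            out = (out & window) if erode else (out | window)
    return out


def adjust_mask(mask: np.ndarray, kernel_size: int = 0, iterations: int = 0,
                erode: bool = True) -> np.ndarray:
    """Erode or dilate `iterations` times; size 0 or 0 iterations leaves the mask as is."""
    result = mask.astype(bool)
    if kernel_size <= 0 or iterations <= 0:
        return result
    for _ in range(iterations):
        result = _morph_once(result, kernel_size, erode)
    return result


mask = np.zeros((9, 9), dtype=bool)
mask[2:7, 2:7] = True                               # 5x5 = 25 pixels
print(adjust_mask(mask, 3, 1, erode=True).sum())    # 9  (shrinks to 3x3)
print(adjust_mask(mask, 3, 1, erode=False).sum())   # 49 (grows to 7x7)
print(adjust_mask(mask, 3, 2, erode=True).sum())    # 1  (two passes leave the center pixel)
```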

The image below shows the result of using erosion: the resulting mask is smaller than the actual object.

- Use Box Smoothing (Default: false)

Available in Post Process. Whether to smooth the segment mask into a box shape. Useful for box-shaped objects with non-smooth masks.
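One way to smooth a ragged mask into a box shape is to replace it with the tightest rectangle, aligned with the mask's principal (PCA) axes, that still contains every mask pixel. This is an assumed approach for illustration, not necessarily what Use Box Smoothing does internally, and `box_smooth` is a hypothetical helper.

```python
import numpy as np


def box_smooth(mask: np.ndarray) -> np.ndarray:
    """Hypothetical box smoothing: fill the tightest rectangle aligned with the
    mask's principal (PCA) axes that still contains every mask pixel."""
    rows, cols = np.nonzero(mask)
    if rows.size < 2:
        return mask.astype(bool)
    pts = np.stack([cols, rows], axis=1).astype(float)   # (x, y) of each mask pixel
    mean = pts.mean(axis=0)
    _, axes = np.linalg.eigh(np.cov(pts, rowvar=False))  # columns = principal axes
    proj = (pts - mean) @ axes                            # mask pixels in the PCA frame
    lo, hi = proj.min(axis=0), proj.max(axis=0)
    # Express every image pixel in the same frame and keep those inside [lo, hi].
    yy, xx = np.mgrid[0:mask.shape[0], 0:mask.shape[1]]
    grid = np.stack([xx.ravel(), yy.ravel()], axis=1).astype(float)
    gproj = (grid - mean) @ axes
    eps = 1e-9  # tolerance so pixels exactly on the box edge count as inside
    inside = np.all((gproj >= lo - eps) & (gproj <= hi + eps), axis=1)
    return inside.reshape(mask.shape)


# A 3x8 rectangular segment with a one-pixel notch in its top edge.
ragged = np.zeros((8, 12), dtype=bool)
ragged[2:5, 1:9] = True
ragged[2, 4] = False
smoothed = box_smooth(ragged)
print(smoothed[2, 4], int(smoothed.sum()))
```

Because the box contains the convex hull of the mask pixels, notches along the edges are filled, while pixels far from the segment stay outside.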

- Estimate Pose From Keypoints (Default: false)

Available in Post Process. Calculates a pose for the object based on the keypoints located in the image. Available for the keypoint model type.

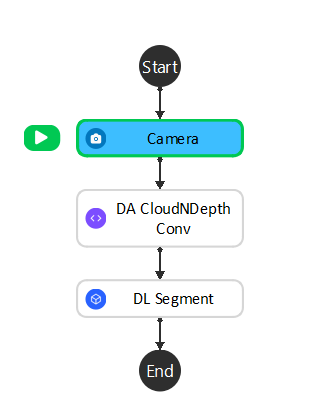

Procedure to Use

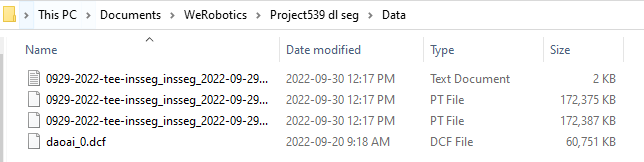

Here is the link to the files in this demonstration.

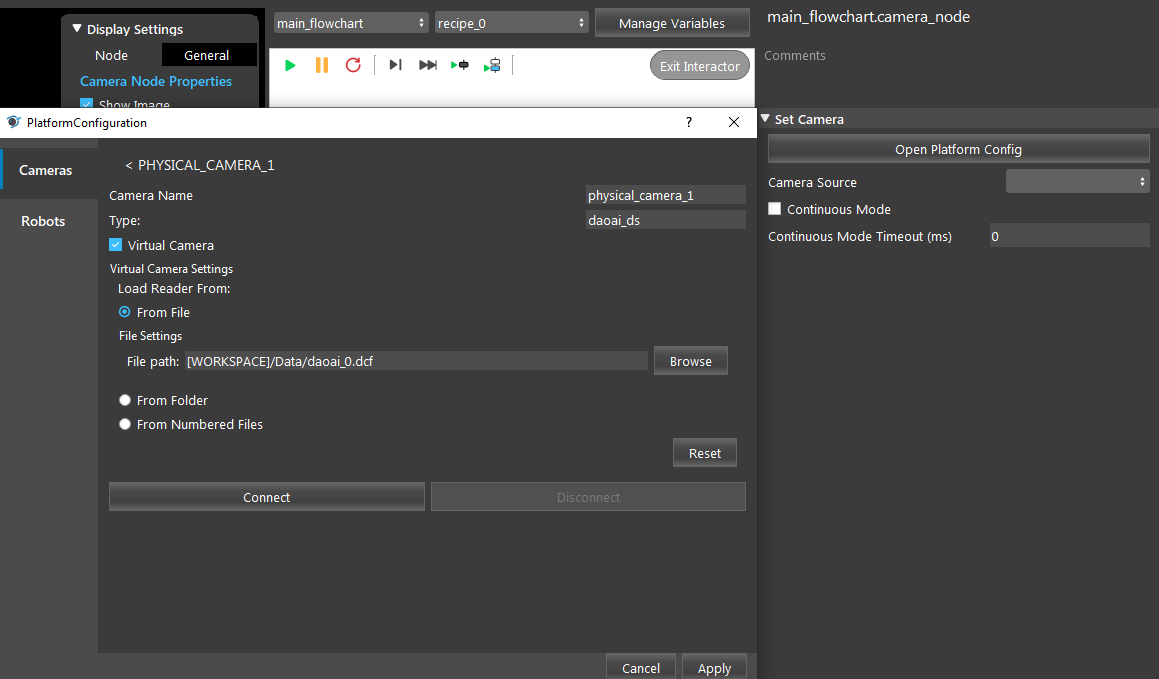

- Add a virtual Camera node using the file daoai_0.dcf. Refer to Camera Node for more detailed instructions.

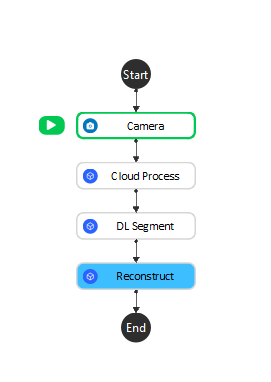

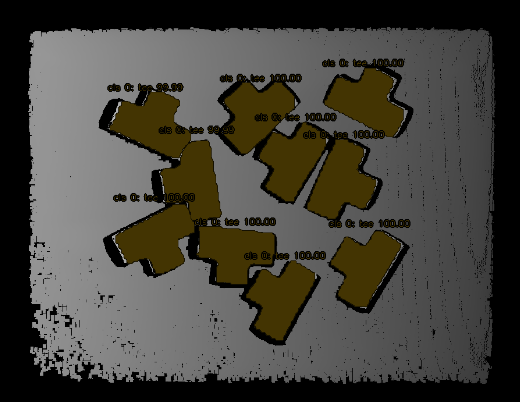

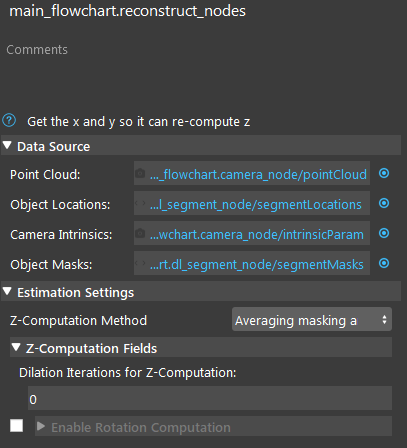

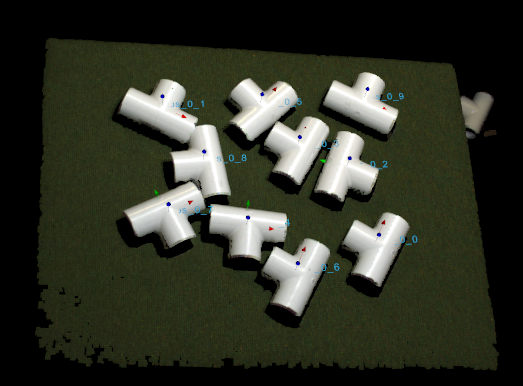

After segmentation, there are a couple of ways to use its output. For example, you can use the center points of the segments as the 2D positions of the objects, estimate their 3D positions with the Reconstruct node, and use these 2D/3D poses to determine picking positions. Alternatively, you can use Scene Crop to divide a scene point cloud into a vector of point clouds, each containing the point cloud of one object. This demonstration uses Reconstruct.

- Link the Camera’s pointCloud output as Point Cloud, DL Segment’s segmentLocations as Object Locations, the Camera’s intrinsicParam as Camera Intrinsics, and DL Segment’s segmentMasks as Object Masks. For Z-Computation Method, choose Averaging masking area. Run the node; the objects’ poses appear in the display.
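To give an idea of what this step computes, the sketch below lifts a 2D segment location to 3D. The Reconstruct node's internals are not documented here, so this is an assumption-laden illustration: the depth is taken as a depth image aligned pixel-for-pixel with the mask (0 marks a missing reading), Z is the mean valid depth inside the mask (in the spirit of "Averaging masking area"), and X and Y follow the standard pinhole camera model with focal lengths `fx`, `fy` and principal point `cx`, `cy`. How intrinsicParam packs these values is not covered, and `reconstruct_center` is a hypothetical helper.

```python
import numpy as np


def reconstruct_center(depth: np.ndarray, mask: np.ndarray, u: float, v: float,
                       fx: float, fy: float, cx: float, cy: float):
    """Back-project the 2D segment location (u, v) to a 3D point (X, Y, Z).

    Assumptions: `depth` is aligned pixel-for-pixel with `mask`, 0 marks a missing
    reading, and Z is the mean valid depth over the mask.
    """
    valid = mask & (depth > 0)
    if not valid.any():
        return None  # no usable depth inside the segment
    z = float(depth[valid].mean())
    # Pinhole model: offset from the principal point, scaled by Z / focal length.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return x, y, z


depth = np.full((4, 4), 1.2)
depth[1, 1] = 0.0                  # one missing depth reading inside the segment
seg_mask = np.zeros((4, 4), dtype=bool)
seg_mask[1:3, 1:3] = True
print(reconstruct_center(depth, seg_mask, u=3.0, v=1.0, fx=100.0, fy=100.0, cx=2.0, cy=2.0))
```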

Exercise

- You have a depth model at hand. Try to set up the flowchart.

Answers for Exercise

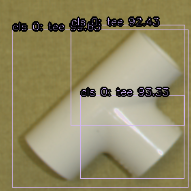

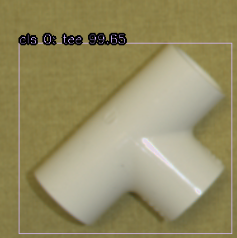

1. You can filter out less confident results by changing the “Min Confidence” value in Post Process. Setting Min Confidence to 0.96 gives the following result: